Autonomous mobile robots (AMRs) are moving from proof-of-concept to deployment across industries. In warehouses, they manage inventory and transport goods. In hospitals, they support sanitation and logistics. In retail, they help monitor stock levels and assist customers. Much of this progress comes from embedded vision systems, which shape how these robots perceive and respond to their environment.

Vision is no longer an optional add-on for AMRs. It enables navigation, mapping, object detection, and spatial awareness. All of these are required for robots to operate safely and accurately indoors.

Why Embedded Vision Matters

Indoor environments can be unpredictable. Aisles get rearranged. Lighting changes. People move in and out of view. To function without interruptions, AMRs need camera systems that help them recognize these changes and respond accordingly.

The choice of vision system depends first on the task. For example, inventory scanning may only need 2D imaging, while obstacle navigation in a crowded facility may require 3D vision with depth perception. The right setup also depends on factors such as frame rate, field of view, lighting conditions, and how quickly data can be processed.
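To make the field-of-view and frame-rate trade-off concrete, here is a minimal sketch in Python. It assumes an ideal pinhole camera with no lens distortion, and the function names and sample values (a 90-degree lens, a robot moving at 1.5 m/s, 30 fps) are illustrative assumptions rather than figures from the white paper.

```python
import math


def coverage_width_m(hfov_deg: float, distance_m: float) -> float:
    """Scene width visible at a given distance, for an ideal pinhole camera."""
    return 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)


def travel_per_frame_m(speed_m_s: float, fps: float) -> float:
    """Distance the robot covers between two consecutive frames."""
    return speed_m_s / fps


if __name__ == "__main__":
    # Illustrative values only: 90-degree horizontal FOV, viewed from 2 m away.
    print(f"Coverage: {coverage_width_m(90.0, 2.0):.2f} m wide at 2 m")
    # Illustrative values only: robot at 1.5 m/s, camera at 30 fps.
    print(f"Travel: {travel_per_frame_m(1.5, 30.0) * 100:.1f} cm per frame")
```

A wider lens covers more of the aisle in each frame, while a higher frame rate shortens the distance the robot travels "blind" between frames. Which matters more depends on the task.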

What Makes Integration Work

Choosing a camera is one part of the equation. AMRs also need processing platforms that can manage high volumes of imaging data with minimal delay. Compatibility with software environments like ROS, support for multi-camera synchronization, and the ability to adapt to changing workloads are just a few of the technical elements that shape integration outcomes.
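As a rough illustration of what "high volumes of imaging data" and multi-camera synchronization can mean in practice, the sketch below estimates the raw, uncompressed data rate of a camera setup and checks whether a set of frame timestamps falls within a tolerance window. The function names, the 2-bytes-per-pixel format, and the 5 ms tolerance are assumptions chosen for illustration. Real pipelines often rely on hardware triggering and compression, which this sketch does not model.

```python
def raw_data_rate_mb_s(width: int, height: int, bytes_per_pixel: int,
                       fps: float, num_cameras: int = 1) -> float:
    """Uncompressed image data rate in megabytes per second (1 MB = 1e6 bytes)."""
    return width * height * bytes_per_pixel * fps * num_cameras / 1e6


def frames_in_sync(timestamps_s: list[float], tolerance_s: float) -> bool:
    """True if all camera timestamps lie within tolerance_s of each other."""
    return max(timestamps_s) - min(timestamps_s) <= tolerance_s


if __name__ == "__main__":
    # Assumed setup: four 1920x1080 cameras, 2 bytes per pixel, 30 fps.
    one = raw_data_rate_mb_s(1920, 1080, 2, 30)
    four = raw_data_rate_mb_s(1920, 1080, 2, 30, num_cameras=4)
    print(f"One camera:   {one:.1f} MB/s")
    print(f"Four cameras: {four:.1f} MB/s")

    # Assumed tolerance: frames captured within 5 ms count as synchronized.
    print(frames_in_sync([10.000, 10.002, 10.004], tolerance_s=0.005))
```

Even this back-of-the-envelope estimate shows why the compute platform and camera interface need to be sized together: the data rate grows linearly with each added camera.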

As deployments scale across use cases, from patrolling restricted zones to enabling remote inspections, the connection between vision hardware and the robot’s compute platform becomes more critical.

Interested in Knowing More?

e-con Systems recently published a white paper that explores this in greater detail, including:

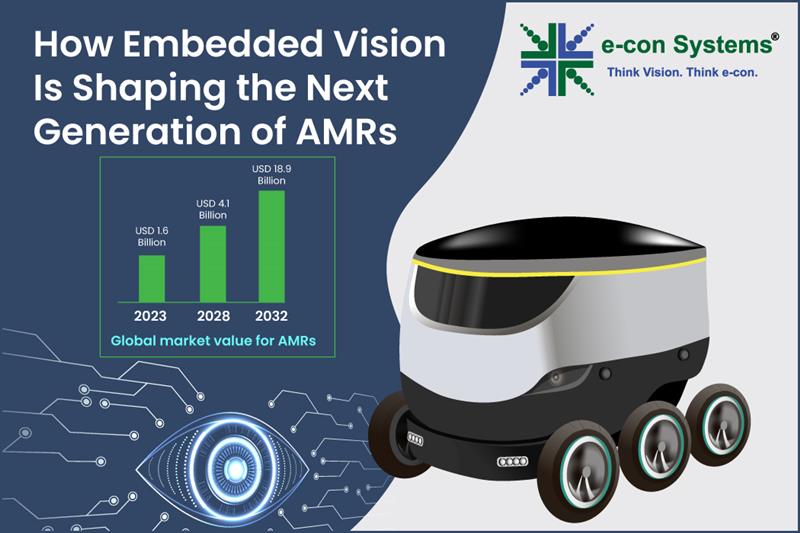

- Market trends driving AMR adoption across sectors

- The role of 2D and 3D imaging in navigation, tracking, and obstacle detection

- Selection criteria for choosing the right camera and processing setup

- A comparison of processor platforms suited for vision workloads

- Integration tips for environments with variable lighting and movement

Download the white paper here to learn how embedded vision is powering the future of AMRs.

Suresh Madhu is a product marketing manager with 16+ years of experience in embedded product design, technical architecture, SOM product design, camera solutions, and product development. He has played an integral part in helping many customers build their products by integrating the right vision technology into them.