FPGAs are ideal for video processing tasks due to their reconfigurable architecture, high performance, and low latency. They are used in applications requiring real-time data processing, high flexibility, and parallel execution.

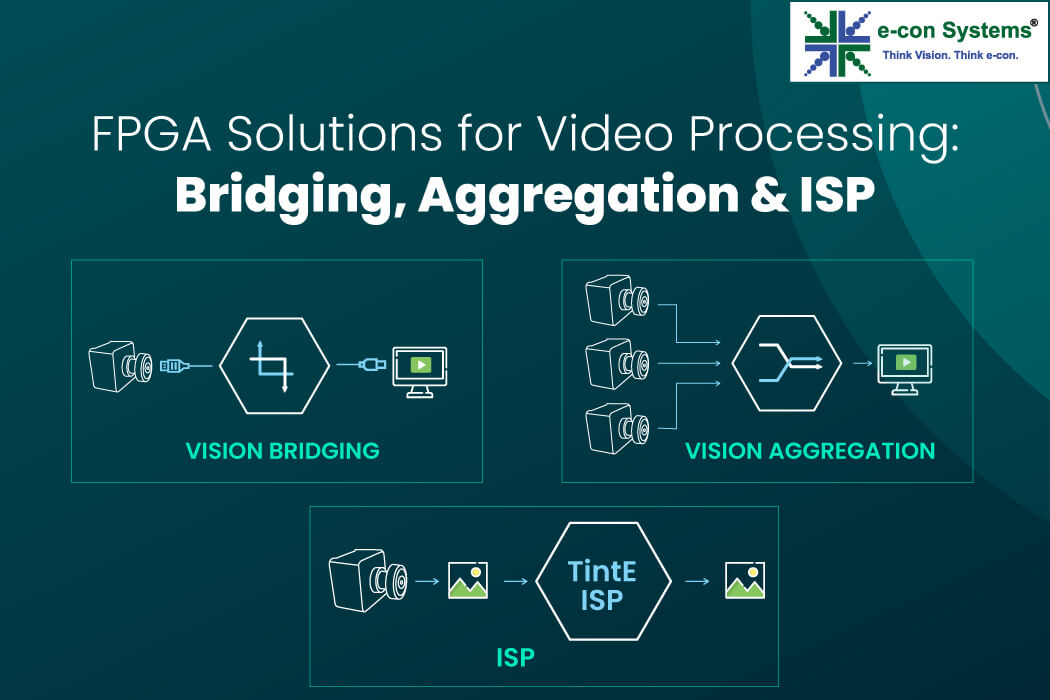

Hence, it’s important to understand how they actually contribute to video processing. In this blog, you’ll discover the major impact of FPGAs in the bridging, aggregation, and ISP processes.

What is Bridging in Video Processing, and How Do FPGAs Help?

Bridging in video processing connects disparate video standards, formats, or interfaces. It serves three purposes: protocol conversion, i.e., converting video streams from one interface (e.g., HDMI, MIPI, USB) to another; signal compatibility between cameras, displays, and processors; and multi-interface support, so that legacy and modern video interfaces can be integrated seamlessly in one system.

Challenges in bridging

Video bridging always comes with the need for higher bandwidth. A 4K stream at 60 fps in YUV 4:2:2 format approaches or exceeds 8 Gbps, which demands high clock rates and an efficiently pipelined design in the processing system.
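The bandwidth figure can be checked with a quick calculation. The sketch below is only an estimate: it assumes 16 or 20 bits per pixel for 8-bit and 10-bit YUV 4:2:2, and a total raster of 4400 x 2250 including blanking, which is an assumption about the timing in use rather than something every link follows.

```python
def video_bandwidth_gbps(width, height, fps, bits_per_pixel):
    """Raw video bandwidth in Gbit/s (1 Gbit = 1e9 bits)."""
    return width * height * fps * bits_per_pixel / 1e9

# YUV 4:2:2 averages two samples per pixel (one luma, one chroma).
active_8bit = video_bandwidth_gbps(3840, 2160, 60, 16)   # active pixels only
active_10bit = video_bandwidth_gbps(3840, 2160, 60, 20)  # 10-bit samples
# Assumed total raster (active + blanking) for a 4K60 timing.
total_8bit = video_bandwidth_gbps(4400, 2250, 60, 16)

print(f"active, 8-bit : {active_8bit:.2f} Gbps")
print(f"active, 10-bit: {active_10bit:.2f} Gbps")
print(f"total, 8-bit  : {total_8bit:.2f} Gbps")
```

Active 8-bit pixels alone sit just under 8 Gbps; adding blanking or moving to 10-bit samples pushes the stream past it.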

Also, the processing system should support high-speed serial interfaces on its input and output pins. Even when the pins and the SerDes architecture meet the speed requirement, the internal video pipeline must still be able to keep up with the conversion process. Color space conversion and format conversion are commonly needed in bridging, so the design needs more than raw I/O and SerDes speed.
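To give a feel for what a color space conversion stage does per pixel, here is a minimal software sketch of RGB to YCbCr using integer arithmetic, the way a fixed-point hardware pipeline might. The coefficients are the BT.601 full-range (JFIF-style) values scaled by 256; the function name and the choice of 8 fractional bits are assumptions for illustration, not a description of any particular FPGA IP core.

```python
def clamp8(value):
    """Saturate to the 8-bit range, as a hardware stage would."""
    return max(0, min(255, value))

def rgb_to_ycbcr(r, g, b):
    """Fixed-point BT.601 full-range conversion (coefficients x 256)."""
    # +128 before the shift rounds to nearest instead of truncating.
    y = (77 * r + 150 * g + 29 * b + 128) >> 8
    cb = ((-43 * r - 85 * g + 128 * b + 128) >> 8) + 128
    cr = ((128 * r - 107 * g - 21 * b + 128) >> 8) + 128
    return clamp8(y), clamp8(cb), clamp8(cr)

print(rgb_to_ycbcr(255, 255, 255))  # white
print(rgb_to_ycbcr(0, 0, 0))        # black
print(rgb_to_ycbcr(255, 0, 0))      # pure red
```

Each output needs three multiplies and a few adds per pixel, which is why this kind of stage maps naturally onto parallel DSP resources rather than a sequential processor.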

The overall system's latency is another factor. Many imaging applications need the lowest possible glass-to-glass latency. If the processing system relies on a frame buffer for the conversion process, latency increases by at least one frame time, and lag becomes visible on the screen.
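The gap between frame-based and line-based buffering is easy to quantify. The sketch below assumes a 60 fps stream with 2250 total lines per frame (including blanking) and a hypothetical pipeline that holds 4 lines; both numbers are illustrative assumptions.

```python
def frame_buffer_latency_ms(fps):
    """Delay added by buffering one full frame, in milliseconds."""
    return 1000.0 / fps

def line_buffer_latency_us(fps, total_lines, lines_buffered):
    """Delay added by buffering a few lines, in microseconds."""
    line_period_us = 1e6 / (fps * total_lines)
    return lines_buffered * line_period_us

frame_ms = frame_buffer_latency_ms(60)
line_us = line_buffer_latency_us(60, total_lines=2250, lines_buffered=4)

print(f"one frame buffer : {frame_ms:.2f} ms")
print(f"four line buffers: {line_us:.2f} us")
```

Under these assumptions the line-buffered path adds tens of microseconds, while a single frame buffer adds more than 16 milliseconds.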

In short, the processing system must support all the required high-speed interfaces and conversion processes with low latency, at an affordable system cost.

Advantages of FPGAs in bridging

- Adapt interface standards: Convert between protocols like HDMI, MIPI CSI-2, DisplayPort, or GMSL.

- Handle data rate mismatches: Efficiently synchronize high-bandwidth data streams between devices.

- Enable multi-protocol support: Allow the same hardware to handle multiple video input/output interfaces. For example, bridging a high-speed MIPI CSI-2 camera to a slower Ethernet or USB interface.
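Handling a data rate mismatch usually comes down to placing a FIFO between the fast and slow sides. A rough sizing rule, assuming one word per clock on each side and that the slow side drains the FIFO in the gaps between bursts, is sketched below. The function name and the example numbers are hypothetical.

```python
def min_fifo_depth(burst_words, write_mhz, read_mhz):
    """Minimum FIFO depth (in words) to absorb one write burst.

    While burst_words are written at write_mhz, the reader removes
    words at read_mhz, so only the excess has to be stored.
    """
    if read_mhz >= write_mhz:
        # No backlog builds up; a real design still needs a small FIFO
        # for the clock-domain crossing itself.
        return 0
    excess = burst_words * (write_mhz - read_mhz)
    return -(-excess // write_mhz)  # ceiling division

# Example: one 1920-word video line written at 150 MHz, read at 100 MHz.
depth = min_fifo_depth(1920, 150, 100)
print(depth)
```

Real designs add margin on top of this figure for synchronizer latency and flag timing.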

How Do FPGAs Help with Aggregation in Video Processing?

Video aggregation combines data streams from multiple sources into a single, unified output. Multi-camera systems need it to merge feeds from several cameras into one stream for further processing. It is also used for data synchronization, aligning frames across inputs, which is crucial for stereo vision and 360-degree cameras. Aggregation also opens the door to bandwidth optimization, since the combined data flow can be compressed or stripped of redundancy.

Challenges in video aggregation

Video aggregation does not stop at collecting video from many cameras and delivering it as a single stream. Customers often want to mix cameras with different resolutions, formats, and frame rates. The cameras may also use different output interfaces, such as MIPI, LVDS, SLVS-EC, or USB, and not every processor offers all of them.

The most challenging part is handling frame buffers for every camera at higher frame rates, because frame buffers add latency to the video pipeline. A multi-camera system should instead operate on line buffers wherever possible. This keeps glass-to-glass latency low, which in turn benefits most of the software applications that consume the images.

Many depth-based algorithms need synchronized video streams from both the RGB sensor and the depth (ToF) sensor. The two streams must be handled without introducing any relative delay between them, which is a hard requirement on the algorithm side. Video aggregation also frequently calls for video format conversion and protocol conversion.
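In hardware, synchronization is typically enforced with shared triggers or timestamps captured at the sensor interface. As a purely illustrative software model of the pairing step, the sketch below matches RGB and depth frames whose timestamps fall within a tolerance and drops frames that have no partner. The function name, the microsecond units, and the tolerance value are all assumptions.

```python
def pair_frames(rgb_ts, depth_ts, tolerance_us):
    """Pair frames from two sorted timestamp lists (microseconds)."""
    pairs = []
    i = j = 0
    while i < len(rgb_ts) and j < len(depth_ts):
        diff = rgb_ts[i] - depth_ts[j]
        if abs(diff) <= tolerance_us:
            pairs.append((rgb_ts[i], depth_ts[j]))
            i += 1
            j += 1
        elif diff < 0:
            i += 1  # RGB frame has no depth partner; drop it
        else:
            j += 1  # depth frame has no RGB partner; drop it
    return pairs

rgb = [0, 33333, 66666, 100000]
depth = [150, 33400, 100200]  # one depth frame is missing
pairs = pair_frames(rgb, depth, tolerance_us=500)
print(pairs)
```

Here the RGB frame at 66666 is discarded because no depth frame arrived close enough to it.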

The next big challenge is memory handling. DDR memory is organized into banks, rows, and columns. Each bank keeps one row open at a time, so an access that lands on a different row of the same bank forces the controller to close the open row and activate a new one, which costs memory bandwidth. The aggregation system must drive the DDR controller with access patterns that avoid these penalties to reach the desired bandwidth.
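A toy model makes the effect of access patterns visible. The sketch below assumes the penalty comes from activating a new row in a bank, uses a simple row:bank:column address mapping with 10 column bits and 3 bank bits, and counts row activations for different patterns. The mapping and sizes are assumptions; real controllers use other mappings and have additional timing constraints.

```python
COL_BITS = 10   # assumed: 1024 columns per row
BANK_BITS = 3   # assumed: 8 banks

def decode(addr):
    """Split a word address into (bank, row, column)."""
    col = addr & ((1 << COL_BITS) - 1)
    bank = (addr >> COL_BITS) & ((1 << BANK_BITS) - 1)
    row = addr >> (COL_BITS + BANK_BITS)
    return bank, row, col

def count_activations(addresses):
    """Count how often a bank has to open a different row."""
    open_row = {}
    activations = 0
    for addr in addresses:
        bank, row, _ = decode(addr)
        if open_row.get(bank) != row:
            activations += 1
            open_row[bank] = row
    return activations

def interleave(base_a, base_b, n):
    """Alternate word accesses between two camera buffers."""
    out = []
    for i in range(n):
        out.append(base_a + i)
        out.append(base_b + i)
    return out

linear = count_activations(range(4096))
same_bank = count_activations(interleave(0, 1 << 13, 1024))   # bank 0, rows 0 and 1
other_bank = count_activations(interleave(0, 1 << 10, 1024))  # banks 0 and 1
print(linear, same_bank, other_bank)
```

Two camera buffers that land in the same bank force a row activation on every access, while placing them in different banks (or writing in long bursts) keeps the rows open.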

Why FPGAs are preferred for aggregation

- Multi-Channel Support: Simultaneously process input from multiple cameras or video feeds.

- Customizable Pipelines: Merge streams into a desired layout (e.g., video walls, multi-view displays).

- Bandwidth Optimization: Compress or encode aggregated data for efficient transmission.
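As a simple model of a side-by-side layout built from line buffers rather than frame buffers, the sketch below merges one line at a time from each camera into a wider output line. It assumes the cameras are line-synchronized and have the same height; the function name and the pixel representation are hypothetical.

```python
def merge_side_by_side(line_sources):
    """Yield merged lines from several per-camera line iterators.

    Only one line per camera is held at any time, mirroring a
    line-buffer-based hardware design.
    """
    for lines in zip(*line_sources):
        merged = []
        for line in lines:
            merged.extend(line)
        yield merged

cam_a = [[1, 1], [2, 2]]  # two lines, two pixels each
cam_b = [[9, 9], [8, 8]]
merged_frame = list(merge_side_by_side([iter(cam_a), iter(cam_b)]))
print(merged_frame)
```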

Bridging and aggregation are used in the surveillance industry to combine feeds from several cameras into a panoramic view. They are also required in automotive applications to merge camera streams for surround-view systems.

What Is the Impact of FPGAs On the ISP Process?

Image signal processing (ISP) turns raw sensor data into usable images. FPGA-based ISPs are preferred for:

- Hardware acceleration: Handle computationally intensive tasks like noise reduction, HDR processing, and color correction.

- Customizable algorithms: Support application-specific processing, such as LFM (LED Flicker Mitigation) or advanced de-warping for fisheye lenses.

- Real-time performance: Maintain low latency even with high-resolution or high-frame-rate streams.

Challenges in ISP pipelines

The ISP pipeline relies on computationally intensive algorithms that need parallel processing to reach the required frame rate without adding latency. For example, the de-bayering process alone must avoid color bleeding while delivering good color reproduction and sharp transitions at edges. Such algorithms are complex and have many intermediate stages, each of which stores the results of the previous stage. If the ISP kept all of those in frame buffers, reaching the required frame rate would become a nightmare.

ISP also requires many mathematical functions, such as logarithms, exponentials, multiplication, and division. Some of these can be handled with lookup-table-based solutions, but most need DSP slices, often several of them running in parallel at more than 150 MHz.
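Gamma correction is a common case where a lookup table replaces an expensive function. The sketch below precomputes an 8-bit table for an assumed gamma of 2.2, so the per-pixel work becomes a single memory read. The gamma value and table size are illustrative assumptions.

```python
GAMMA = 2.2  # assumed display gamma

# Precompute once: 256 entries, one per possible 8-bit input value.
GAMMA_LUT = [round(255 * (i / 255) ** (1 / GAMMA)) for i in range(256)]

def apply_gamma(pixels):
    """Apply gamma correction with one table lookup per pixel."""
    return [GAMMA_LUT[p] for p in pixels]

corrected = apply_gamma([0, 64, 128, 255])
print(corrected)
```

On an FPGA, a table like this typically lives in block RAM, leaving DSP slices free for operations that cannot be tabulated so cheaply.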

ISP systems need more than a single type of processing engine. Some parts of the ISP, like de-bayering and color correction, demand heavy parallel processing. Other functions, like auto exposure and auto white balance, suit a processor better, because they rely on branch-heavy, state-machine-style control logic. For this reason, many ISP vendors prefer hardware-software co-design.
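Auto white balance illustrates the split well: the hardware pipeline can accumulate per-channel sums for each frame, and software then decides the gains. The sketch below uses the gray-world assumption (the scene averages to gray), which is one simple approach and not necessarily what any given ISP uses. The function names and the Q8 fixed-point format are assumptions.

```python
def gray_world_gains(sum_r, sum_g, sum_b):
    """Compute white balance gains from per-channel sums of a frame."""
    if min(sum_r, sum_g, sum_b) <= 0:
        return 1.0, 1.0, 1.0  # no usable statistics; leave colors as-is
    # Scale red and blue so their averages match green.
    return sum_g / sum_r, 1.0, sum_g / sum_b

def to_q8(gain):
    """Convert a gain to unsigned fixed point with 8 fractional bits."""
    return round(gain * 256)

# Sums as a hardware statistics block might report them.
gains = gray_world_gains(100, 200, 400)
registers = [to_q8(g) for g in gains]
print(gains, registers)
```

The resulting fixed-point values are what the software side would write back to gain registers in the parallel pixel pipeline.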

ISP algorithms are tuned continuously to achieve the best image quality. Research papers keep introducing new methods for noise removal, de-bayering, and other ISP stages. The hardware therefore needs to be reconfigurable, so that the ISP output can keep improving for the same sensor. In addition, new image sensors reach the market all the time, each with new features. Reconfigurability is therefore in high demand for ISP implementations.

The functions FPGAs handle in ISPs include demosaicing (converting Bayer patterns into RGB images) and color space conversion, which ensures proper color representation for different standards. FPGAs are also widely used for HDR and LFM technology to enhance image quality in challenging lighting conditions.
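To make the demosaicing step concrete, here is the simplest possible version: each 2x2 RGGB cell becomes one RGB pixel, with the two green samples averaged. This halves the resolution and is far cruder than the edge-aware methods used in production ISPs; it is only meant to show the data flow. The RGGB layout and the function name are assumptions.

```python
def demosaic_rggb_half(raw):
    """Convert an RGGB Bayer image (list of rows) to half-size RGB.

    Each 2x2 cell is laid out as:
        R  G
        G  B
    Only two lines of the raw image are needed at a time.
    """
    height, width = len(raw), len(raw[0])
    rgb = []
    for y in range(0, height - 1, 2):
        row = []
        for x in range(0, width - 1, 2):
            r = raw[y][x]
            g = (raw[y][x + 1] + raw[y + 1][x] + 1) // 2  # rounded average
            b = raw[y + 1][x + 1]
            row.append((r, g, b))
        rgb.append(row)
    return rgb

raw = [
    [10, 20, 12, 22],
    [30, 40, 32, 42],
]
rgb_image = demosaic_rggb_half(raw)
print(rgb_image)
```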

FPGA development requires expertise in an HDL (e.g., Verilog, VHDL) or in high-level tools. Using pre-built IP cores or frameworks like OpenCL or HLS can reduce the programming complexity of FPGAs.

Why FPGA is used in video processing applications

- Parallel processing: Handles multiple video streams simultaneously

- Reconfigurability: Allows on-the-fly adjustments for various video processing tasks

- Low latency: Ensures real-time performance, which is critical in video-based systems

- Energy efficiency: Optimized hardware utilization minimizes power consumption

- Flexibility: Customize the hardware to evolving standards and custom needs

- Scalability: Handle varying resolutions and frame rates, from HD to 8K

- Integration capability: Combine video processing with other tasks like AI inference, making FPGAs ideal for edge devices

Maximize the Power of FPGAs with e-con Systems

e-con Systems has been designing, developing, and manufacturing OEM camera solutions since 2003. Thanks to our partnerships with leading FPGA vendors such as Lattice and Xilinx, we can simplify the development of customized FPGA-powered camera solutions to meet application needs in industries like medical, ADAS, industrial, and more.

Our FPGA-powered camera solutions include:

- See3CAM_CU83 – 4K AR0830 RGB-IR USB 3.2 Gen 1 Camera

- DepthVista_USB_RGBIRD – 3D Time of Flight (ToF) USB Camera

- See3CAM_CU135M – 4K Monochrome USB 3.1 Gen 1 Camera

- See3CAM_CU30 – Low Light USB Camera

Our solutions leverage Lattice Semiconductor’s FPGA chips, including CrossLink, CrossLink-NX, ECP5, and Certus-NX, to deliver exceptional performance.

Furthermore, e-con Systems’ expertise gives you access to custom design services, reference designs, demo software tools, IP cores, and hardware platforms.

Use our Camera Selector to check out our complete portfolio.

If you need help integrating custom cameras into your embedded vision applications, please write to camerasolutions@e-consystems.com.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and comes with a rich experience of more than 15 years in the embedded vision space. He brings to the table a deep knowledge in USB cameras, embedded vision cameras, vision algorithms and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also comes with expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new age AI based applications.