Advancements in machine learning, artificial intelligence, embedded vision, and processing technology have helped innovators build autonomous machines that have the ability to navigate an environment with little human supervision. Examples of such devices include AMRs (Autonomous Mobile Robots), autonomous tractors, automated forklifts, etc.

Making these devices truly autonomous requires them to move around without any manual navigation. This in turn requires the capability to measure depth for mapping, localization, path planning, and obstacle detection and avoidance. This is where depth-sensing cameras come into play: they give machines a 3D perspective of their surroundings.

In this blog, we will learn what depth-sensing cameras are, the different types, and their working principle. We will also get to know the most popular embedded vision applications that use these new-age cameras.

Different Types of Depth-Sensing Cameras and Their Functionalities

Depth sensing is the measurement of the distance from a device to an object, or of the distance between two objects. A 3D depth-sensing camera is used for this purpose: it automatically detects nearby objects and measures the distance to them on the go. This helps the device or equipment integrated with the depth-sensing camera move autonomously by making intelligent decisions in real time.

Out of all the depth technologies available today, three of the most popular and commonly used ones are:

- Stereo vision

- Time of flight

  - Direct Time-of-Flight (dToF)

    - LiDAR

  - Indirect Time-of-Flight (iToF)

- Structured light

Next, let us look at the working principle of each of the above-listed depth-sensing technologies in detail.

Stereo vision

A stereo camera relies on the same principle as human eyesight: binocular vision. Human binocular vision uses what is called stereo disparity to perceive the depth of an object. Stereo disparity is the difference in an object’s apparent location as seen by two different sensors or cameras (eyes in the case of humans), and the distance to the object is computed from this difference.

The image below illustrates this concept:

Figure 1 – stereo disparity

In the case of a stereo camera, the depth is calculated using an algorithm that usually runs on the host platform. However, for the camera to function effectively, the two images need to have sufficient details and texture. Owing to this, stereo cameras are recommended for outdoor applications that have a large field of view.
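To make the disparity-to-depth step concrete, here is a minimal sketch of the standard relation for a rectified stereo pair, Z = f × B / d. The focal length, baseline, and disparity values are hypothetical and are not taken from any particular camera; a real pipeline would also need calibration, rectification, and a matching algorithm to produce the disparity in the first place.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative values are invalid.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, chosen only for illustration.
focal_length_px = 800.0   # focal length expressed in pixels
baseline_m = 0.06         # distance between the two sensors, in meters

for disparity_px in (48.0, 24.0, 12.0):
    depth_m = depth_from_disparity(focal_length_px, baseline_m, disparity_px)
    print(f"disparity {disparity_px:5.1f} px -> depth {depth_m:.2f} m")
```

Note how halving the disparity doubles the estimated depth. This is one reason stereo accuracy tends to degrade with distance: far objects produce small disparities, so a one-pixel matching error matters more.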

Stereo vision cameras are extensively used in medical imaging, AR and VR applications, 3D construction and mapping, etc.

To learn more about the working principle of stereo cameras, please read: What is a stereo vision camera?

Time of Flight Camera

Time of flight (ToF) refers to the time taken by light to travel a given distance. Time of flight cameras work based on this principle where the distance to an object is estimated using the time taken by the light emitted to come back to the sensor after reflecting off the object’s surface.

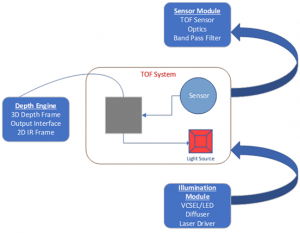
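The principle can be written as distance = (speed of light × round-trip time) / 2, where the division by two accounts for the light travelling to the object and back. The short sketch below is only an illustration of that arithmetic; the function names and the sample values are our own.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s: float) -> float:
    """Distance to the object, given the time for light to go out and come back."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_distance(distance_m: float) -> float:
    """Inverse relation: how long light takes to cover the distance twice."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Illustrative values only.
print(f"10 ns round trip -> {distance_from_round_trip(10e-9):.3f} m")
print(f"1 m target       -> {round_trip_for_distance(1.0) * 1e9:.2f} ns round trip")
```

A target one meter away returns light in under seven nanoseconds, which is why ToF sensors need very fast timing or modulation electronics.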

A time of flight camera has three major components:

- ToF sensor and sensor module

- Light source

- Depth processor

The architecture of a time-of-flight camera is given below:

Figure 2 – architecture of a time of flight camera

The sensor and sensor module are responsible for collecting the light data reflected from the target object. The sensor converts the collected light into raw pixel data. The light source used is either a VCSEL or LED that typically emits light in the NIR (Near InfraRed) region. The function of the depth processor is to convert the raw pixel data from the sensor into depth information. In addition, it also helps in noise filtering and provides 2D IR images that can be used for other purposes of the end application.

ToF cameras are commonly used in applications such as smartphones and consumer electronics, biometric authentication and security, autonomous vehicles and robotics, etc.

Based on the method used to determine distance, ToF is of two types: Direct Time of Flight (dToF) and Indirect Time of Flight (iToF). Let us look into each of these, and their differences, in detail.

Direct Time of Flight (dToF)

Direct ToF method works by scanning the scene with pulses of invisible infrared laser light. The light reflected by the objects in the scene is analyzed to measure the distance.

The distance from the dToF camera to the object is measured by computing the time taken by a pulse of light to travel from the emitter to the object in the scene and back again. For this, the dToF camera uses a sensing pixel called a Single Photon Avalanche Diode (SPAD). The SPAD can detect the sudden spike in photons when the pulse of light is reflected back from the object. By timing this photon spike relative to the emitted pulse, the camera measures the round-trip time.
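One common way to locate the photon spike is to accumulate photon arrival times over many pulses into a histogram and pick the bin with the most counts. The sketch below assumes that approach, which this article does not describe, and uses made-up counts and a hypothetical 1 ns bin width. Real sensors typically add ambient-light subtraction and sub-bin interpolation.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_histogram(counts, bin_width_s):
    """Estimate distance from a histogram of photon arrival times.

    counts[i] is the number of photons detected in time bin i after the
    pulse was emitted. The fullest bin is taken as the reflected pulse.
    """
    if not counts:
        raise ValueError("histogram is empty")
    peak_bin = max(range(len(counts)), key=lambda i: counts[i])
    # Use the center of the peak bin as the round-trip time estimate.
    round_trip_s = (peak_bin + 0.5) * bin_width_s
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical data: low ambient counts with a clear spike in bin 6.
counts = [2, 1, 3, 2, 1, 2, 40, 3, 1, 2]
bin_width_s = 1e-9

print(f"estimated distance: {distance_from_histogram(counts, bin_width_s):.3f} m")
```

With 1 ns bins, each bin spans roughly 15 cm of distance, so the timing resolution of the sensor directly limits depth resolution.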

dToF cameras are usually low-resolution. The advantages of dToF sensors are that they are compact and inexpensive. dToF cameras are ideal for applications that do not require high resolution and real-time performance.

Let us look into the details of LiDAR, a dToF technology that uses infrared laser beams for measuring distances.

LiDAR

LiDAR (Light Detection and Ranging) cameras use a laser emitter to project a raster light pattern on the scene being recorded and scan it back and forth.

The camera sensor records the time period it takes for the pulse of light to reach the object and reflect back to itself. This enables the LiDAR system to measure the distance to the object that reflected light based on the speed of light.

LiDAR sensors can be used to build up a 3D map of the area being recorded. These sensors scan the scene to produce a single frame of data based on the distance measured and the direction in which the light is directed. Each captured frame contains hundreds or even thousands of data points. Once the LiDAR system completes the scan, it generates a point cloud based on the positions of the acquired points. One added advantage of LiDAR systems is that they can be used in continuous streaming mode.
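To illustrate how a measured distance and a beam direction turn into a point in a point cloud, here is a minimal sketch that converts each return from spherical to Cartesian coordinates. The axis convention (x forward, y left, z up, with azimuth and elevation angles) and the sample returns are assumptions for this example; actual LiDAR drivers define their own conventions and data formats.

```python
import math


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one LiDAR return (distance plus beam direction) to an (x, y, z) point."""
    horizontal_m = range_m * math.cos(elevation_rad)
    x = horizontal_m * math.cos(azimuth_rad)
    y = horizontal_m * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def build_point_cloud(returns):
    """Turn a scan of (range, azimuth, elevation) tuples into a list of 3D points."""
    return [to_cartesian(r, az, el) for r, az, el in returns]


# Hypothetical scan: three returns at different beam directions.
scan = [
    (2.0, 0.0, 0.0),
    (1.0, math.pi / 2, 0.0),
    (3.0, math.radians(30), math.radians(10)),
]

for point in build_point_cloud(scan):
    print(tuple(round(c, 3) for c in point))
```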

Various LiDAR systems are used for different applications. Since LiDAR systems use a collimated and focused laser beam to scan the target area, they prove effective even at long ranges. Low-power LiDAR sensors are also available and can be used for depth sensing at short ranges. LiDAR can be used in varied lighting conditions, but it is sensitive to ambient light outdoors.

LiDAR sensors usually use infrared lasers in one of two wavelengths: 905 nanometers and 1550 nanometers. The shorter wavelength lasers are less likely to be absorbed by water in the atmosphere and are more useful for long-range surveying. In comparison, the longer wavelength infrared lasers can be used in eye-safe applications like robots operating around humans.

Indirect Time of Flight (iToF)

Indirect Time of Flight (iToF) cameras illuminate the entire scene using diffuse infrared laser light with the help of multiple emitters. This illumination is done in a series of modulated laser pulses. The light is continuously modulated by pulsing the laser emitters at a high frequency.

In iToF, the travel time of each pulse of light isn’t measured directly, as it is in dToF. Instead, each sensor pixel records the reflected waveform, and the camera compares it with the emitted waveform to find the phase shift. The amount of phase shift at each pixel gives the distance to the corresponding point in the scene.
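The phase-to-distance conversion can be sketched as follows. This example assumes a widely used four-sample scheme, in which each pixel is sampled at 0°, 90°, 180°, and 270° of the modulation period, and a hypothetical 20 MHz modulation frequency; neither detail comes from this article, and real sensors differ in sampling convention and calibration. The relation used is distance = c × phase / (4π × f).

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def phase_from_samples(q0, q1, q2, q3):
    """Recover the phase shift from four samples taken 90 degrees apart.

    Assumed sample model: q_k = offset + amplitude * cos(phase - k * pi / 2).
    The differences cancel the offset (ambient light) term.
    """
    return math.atan2(q1 - q3, q0 - q2) % (2.0 * math.pi)


def distance_from_phase(phase_rad, modulation_hz):
    """Distance corresponding to a phase shift of the modulated light."""
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * modulation_hz)


def unambiguous_range_m(modulation_hz):
    """Beyond this distance the phase wraps around and the reading aliases."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * modulation_hz)


def simulate_samples(distance_m, modulation_hz, amplitude=100.0, offset=300.0):
    """Generate the four samples one pixel would see under the assumed model."""
    phase = 4.0 * math.pi * modulation_hz * distance_m / SPEED_OF_LIGHT_M_PER_S
    return tuple(offset + amplitude * math.cos(phase - k * math.pi / 2.0) for k in range(4))


modulation_hz = 20e6  # hypothetical modulation frequency
samples = simulate_samples(1.5, modulation_hz)
estimated_m = distance_from_phase(phase_from_samples(*samples), modulation_hz)

print(f"estimated distance: {estimated_m:.3f} m")
print(f"unambiguous range:  {unambiguous_range_m(modulation_hz):.2f} m")
```

Because the phase wraps every full cycle, a single modulation frequency can only measure up to the unambiguous range. Raising the frequency improves precision but shortens that range, which is why some iToF systems combine multiple frequencies.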

With iToF cameras, the distance to all points in the scene can be determined with a single shot.

If you are interested in knowing more about how a time-of-flight camera works:

Read: What is a ToF sensor? What are the key components of a ToF camera?

Read: How Time-of-Flight (ToF) compares with other 3D depth mapping technologies

Structured light camera

A structured light-based depth-sensing camera uses a laser/LED light source to project light patterns (mostly a striped one) onto the target object. Based on the distortions obtained, the distance to the object can be calculated. A structured light 3D scanner is often used to reconstruct the 3D model of an object.
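One simplified way to see how pattern distortion encodes depth is triangulation between the projector and the camera. The sketch below assumes an idealized setup that this article does not specify: the projector and camera sit a known baseline apart with parallel optical axes, and the angle of each stripe is known on both the projector side and the camera side. All values are hypothetical.

```python
import math


def depth_from_triangulation(baseline_m, projector_angle_rad, camera_angle_rad):
    """Depth of a stripe point by intersecting the projector ray and the camera ray.

    Assumed geometry: projector at x = 0, camera at x = baseline_m, both
    looking along +z. Angles are measured from each optical axis, positive
    toward +x.
    """
    denominator = math.tan(projector_angle_rad) - math.tan(camera_angle_rad)
    if denominator <= 0:
        raise ValueError("rays do not intersect in front of the devices")
    return baseline_m / denominator


baseline_m = 0.2  # hypothetical projector-to-camera spacing

# A stripe point at x = 0.1 m, z = 1.0 m as seen from each device.
projector_angle = math.atan2(0.1, 1.0)
camera_angle = math.atan2(0.1 - baseline_m, 1.0)
print(f"depth: {depth_from_triangulation(baseline_m, projector_angle, camera_angle):.3f} m")

# If the surface is closer, the same projected stripe appears shifted in the camera.
shifted_camera_angle = math.atan2(0.05 - baseline_m, 0.5)
print(f"depth: {depth_from_triangulation(baseline_m, projector_angle, shifted_camera_angle):.3f} m")
```

The second call keeps the projector angle fixed and changes only where the camera sees the stripe, which is the "distortion" that a structured light system converts into depth.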

Structured light cameras are used in applications such as 3D scanning and modeling, quality control and inspection, biometric authentication, cultural heritage preservation, retail and e-commerce, etc.

Comparison Between the Depth Sensing Technologies

All the 3D depth mapping cameras we discussed above come with their own pros and cons. The choice of camera used will entirely depend on the specifics of your end application. It is always recommended that you get the help of an imaging expert like e-con Systems to guide you through the camera evaluation and integration process.

The technologies can be analyzed using ten different parameters. A detailed comparison is given in the below table:

| | STEREO VISION | STRUCTURED LIGHT | dToF | iToF |
|---|---|---|---|---|
| Principle | Compares disparities of stereo images from two 2D sensors | Detects distortions of illuminated patterns by 3D surface | Time taken by reflected light from an object to the sensor is measured | Phase shift of modulated light pulses is measured |
| Software Complexity | High | Medium | Low | Medium |
| Material Cost | Low | High | Low | Medium |
| Depth (“z”) Accuracy | cm | µm~cm | mm~cm | mm~cm |
| Depth Range | Limited | Scalable | Scalable | Scalable |
| Low light | Weak | Good | Good | Good |
| Outdoor | Good | Weak | Fair | Fair |
| Response Time | Medium | Slow | Fast | Very Fast |
| Compactness | Low | High | High | Medium |
| Power Consumption | Low | Medium | Medium | Scalable-Medium |

Popular Embedded Vision Applications That Use Depth-Sensing Cameras

As mentioned before, depth sensing is required for any device that has to navigate autonomously. Given below are some of the most popular embedded vision applications that need 3D depth cameras to function seamlessly:

- Autonomous Mobile Robots (AMR)

- Autonomous tractors

- People counting and facial anti-spoofing systems

- Remote patient monitoring

Autonomous Mobile Robots

Autonomous Mobile Robots (AMR) have helped automate various tasks across industrial, retail, agricultural, and medical applications. Following are a few examples of AMRs used in warehouses, retail stores, hospitals, office buildings, agricultural fields, etc.

- Goods to person robots

- Pick and place robots

- Telepresence robots

- Harvesting robots

- Automated weeders

- Patrol robots

- Cleaning robots

Whatever the type, any robot that has to move autonomously without any human supervision needs to have a 3D depth camera in it. Some robots that use a depth-sensing camera might use a combination of automated and human-aided navigation. Even in those cases, depth cameras help detect obstacles and avoid accidents. An example of this type of robot is a delivery robot.
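As a rough illustration of how depth data can feed an obstacle check, the sketch below scans a depth frame for the nearest valid reading and decides whether the robot should stop. The frame layout (a grid of distances in meters, with 0 marking pixels that returned no depth), the function names, and the threshold are all assumptions for this example. A production AMR would fuse this with mapping, localization, and path planning.

```python
def nearest_obstacle_m(depth_frame, invalid_value=0.0):
    """Return the smallest valid depth in the frame, or None if no pixel is valid."""
    valid = [d for row in depth_frame for d in row if d > invalid_value]
    return min(valid) if valid else None


def should_stop(depth_frame, stop_distance_m):
    """True when any valid pixel is closer than the stop distance."""
    nearest = nearest_obstacle_m(depth_frame)
    return nearest is not None and nearest < stop_distance_m


# Hypothetical 3x4 depth frame in meters; 0.0 marks pixels with no depth reading.
depth_frame = [
    [2.4, 2.5, 0.0, 2.6],
    [2.3, 0.8, 0.9, 2.5],
    [2.2, 2.3, 2.4, 0.0],
]

print("nearest obstacle:", nearest_obstacle_m(depth_frame), "m")
print("stop:", should_stop(depth_frame, stop_distance_m=1.0))
```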

To develop a better understanding of how depth cameras function in AMRs, please have a look at the article How does an Autonomous Mobile Robot use time of flight technology?

Learn how e-con Systems helped a leading Autonomous Mobile Robot manufacturer enhance warehouse automation by integrating cameras to enable accurate object detection and error-free barcode reading.

Autonomous tractors

Autonomous tractors are used to automate key farming processes such as weed & bug detection, crop monitoring, etc. They work similarly to AMRs when it comes to depth sensing. Depth cameras help them measure the distance to obstacles and nearby objects in order to move from one point to the other. This ability to move autonomously is a game-changer, given the labor shortage in the agricultural industry.

People counting and facial anti-spoofing systems

People counting and facial anti-spoofing systems are used to count people and detect fraud in identity management and access control. 3D depth cameras such as stereo cameras and time of flight cameras are needed here to determine the exact position of a person during counting or facial recognition.

Remote patient monitoring

Modern remote patient monitoring systems leverage artificial intelligence and camera technology to detect key events like patient falls, enabling round-the-clock patient monitoring without a human observer. However, detecting falls requires capturing the patient’s video and sharing and storing it for analysis. This raises concerns about privacy. This is where a 3D depth camera can make a difference.

With the help of these cutting-edge camera systems, patient movements can be tracked using depth data alone. This ensures privacy and gives the patient peace of mind as no visually identifiable image or video is processed by the remote patient monitoring camera.

If you wish to read further on how 3D depth cameras (especially time-of-flight cameras) help patient monitoring systems improve privacy, please read How does a Time-of-Flight camera make remote patient monitoring more secure and private?

That’s all about depth-sensing cameras. In case you have any further queries on the topic, please feel free to leave a comment.

e-con Systems Offers High-Performance Depth-Sensing Cameras

e-con Systems is an industry pioneer with 20+ years of experience in designing, developing, and manufacturing OEM cameras. We offer depth-sensing ToF cameras that support NVIDIA Jetson processing platforms and are compatible with USB, GMSL, and MIPI CSI-2 interfaces. Our ToF cameras also offer advanced features such as global shutter, multi-camera support, IR imaging, and complex image processing capabilities.

If you are looking for help in integrating 3D depth cameras into your autonomous vehicle, please write to us at camerasolutions@e-consystems.com.

Remember, we also provide customization services to make sure the camera meets all the requirements for your specified application.

You can also visit the Camera Selector to have a look at our complete portfolio of cameras.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and comes with a rich experience of more than 15 years in the embedded vision space. He brings to the table a deep knowledge in USB cameras, embedded vision cameras, vision algorithms and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also comes with expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new age AI based applications.