Autofocus systems are an integral part of modern-day cameras, enabling precise focus adjustment for clear and sharp images. In this blog, we will delve into the key concepts of autofocus systems, from foundational optics principles to advanced technologies like liquid lenses. Read on!

What is the Concept of a Point Source in Optics? What Happens When a Point Source Goes Out of Focus?

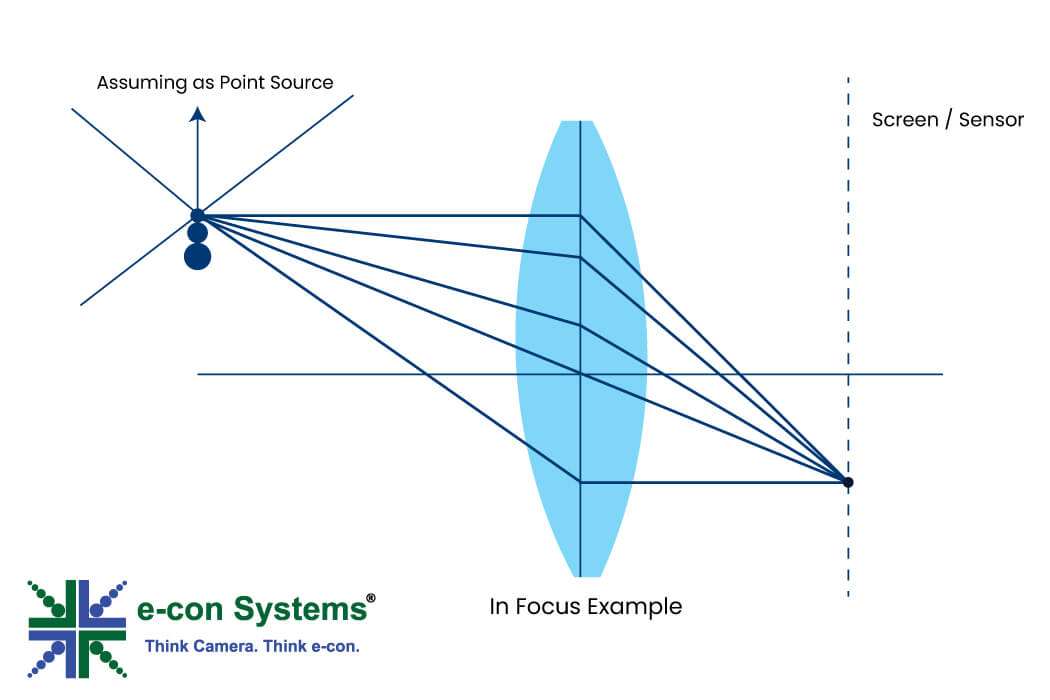

In optics, we treat every object as a collection of point sources of light. Ideally, a perfect optical system would render each point source as a single point on the image plane. In practice, aberrations, diffraction, and imperfections in the optical system spread each point into a small blur spot. This spreading reduces contrast in the resulting image, which directly affects autofocus performance.

Figure 1: In Focus Point Source

When contrast is degraded, sharpness diminishes. This degradation is particularly significant in autofocus systems, where achieving maximum contrast corresponds to achieving sharp focus.
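To make the link between blur and contrast concrete, here is a minimal Python sketch. It assumes a Gaussian point spread function as a simple stand-in for defocus blur (real defocus blur is closer to a uniform disk) and measures contrast as Michelson modulation, (Imax - Imin) / (Imax + Imin), of a sinusoidal test pattern. The pattern and blur values are illustrative, not measured.

```python
import math

def michelson_contrast(signal):
    """Contrast (modulation) = (Imax - Imin) / (Imax + Imin)."""
    hi, lo = max(signal), min(signal)
    return (hi - lo) / (hi + lo)

def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian: an assumed, simplified model of a defocus blur PSF."""
    k = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]

def blur_periodic(signal, kernel):
    """Circular convolution, so the periodic test pattern has no edge effects."""
    n, r = len(signal), len(kernel) // 2
    return [sum(kernel[j] * signal[(i + j - r) % n] for j in range(len(kernel)))
            for i in range(n)]

# Sinusoidal target: 64 samples, period 16 samples, mean 0.5, amplitude 0.4 (contrast 0.8).
N, PERIOD = 64, 16
target = [0.5 + 0.4 * math.sin(2 * math.pi * i / PERIOD) for i in range(N)]

for sigma in (0.5, 2.0, 4.0):  # larger sigma = point sources spread further = more defocus
    blurred = blur_periodic(target, gaussian_kernel(sigma, radius=int(4 * sigma) + 1))
    print(f"sigma={sigma}: contrast {michelson_contrast(blurred):.3f}")
```

The printed contrast falls as sigma grows, which is the behaviour autofocus relies on: the lens position with the least spreading gives the highest contrast.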

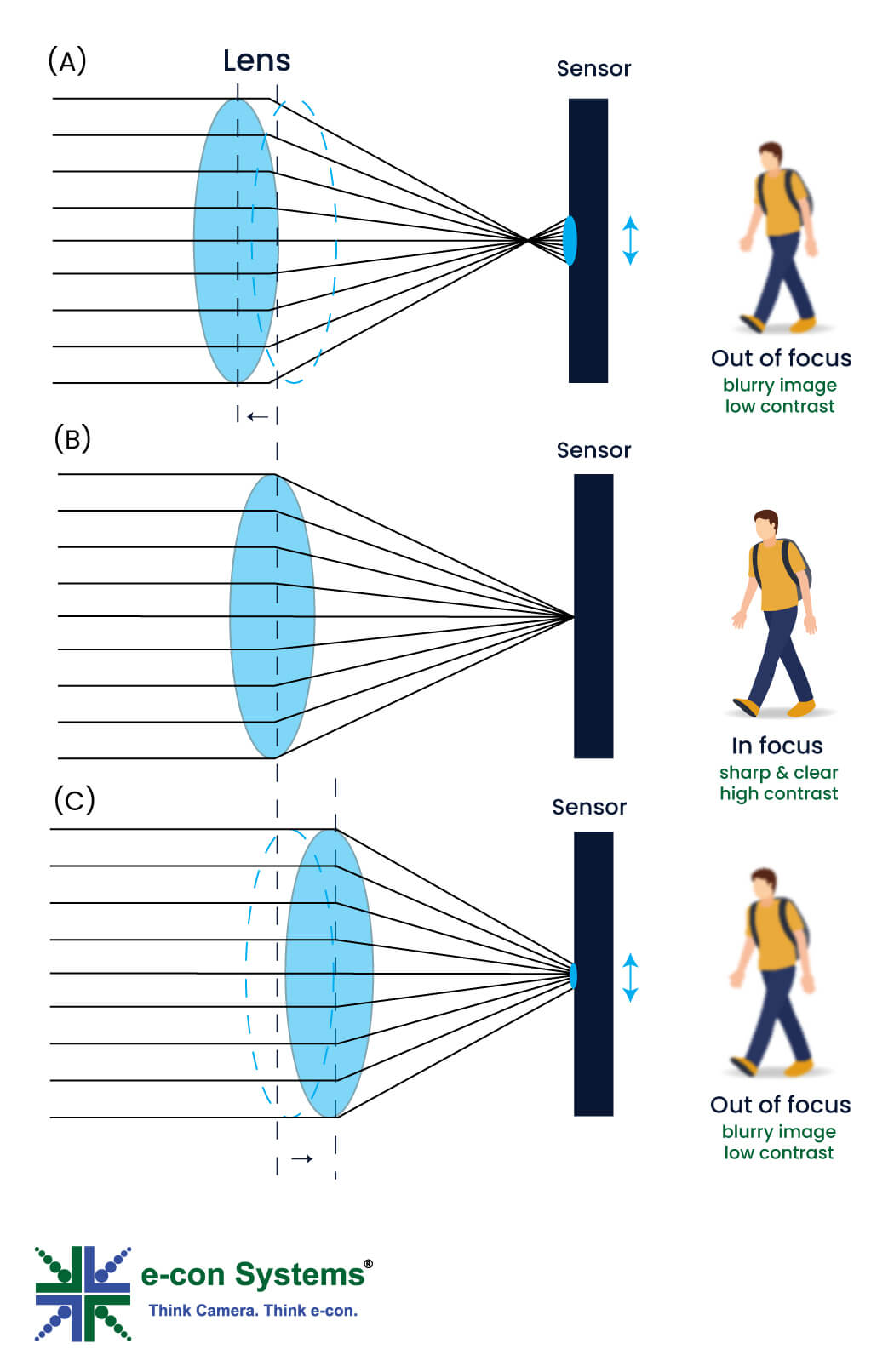

Figure 2: In-Focus and Out-of-Focus Images

In the figure above, B shows the image in focus, while A and C show how the image appears when it is out of focus. X and Y are graphical representations of the in-focus and out-of-focus cases, respectively.

In A, the rays converge in front of the screen/sensor; in C, they would converge behind it. In both cases, each point of light reaches the sensor as a blur spot rather than a point, so the image appears blurred.

What is the Modulation Transfer Function (MTF)?

The Modulation Transfer Function (MTF) quantifies the imaging performance of an optical system by analyzing its ability to resolve detail and maintain contrast.

MTF of the System = MTF of the Lens × MTF of the Sensor (multiplied at each spatial frequency)

Figure 3: MTF Graph

[Source: www.qualitymag.com]

Figure 3 above shows an MTF graph, with spatial frequency on the x-axis and contrast on the y-axis. As spatial frequency increases, contrast decreases: the finer the detail, the less contrast the system can preserve.
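The product rule for system MTF and the falling curve in Figure 3 can be illustrated with two idealized models, both assumptions made only for this sketch: the lens blur is treated as a Gaussian PSF of standard deviation σ, whose MTF is exp(-2π²σ²f²), and the sensor as square pixels of pitch p with a 100% fill factor, whose MTF is |sinc(f·p)|. The pixel pitch and blur size below are hypothetical.

```python
import math

def lens_mtf_gaussian(f, sigma_mm):
    """Assumed lens model: Gaussian PSF with std. dev. sigma_mm -> MTF = exp(-2 pi^2 sigma^2 f^2)."""
    return math.exp(-2 * math.pi ** 2 * sigma_mm ** 2 * f ** 2)

def sensor_mtf_pixel(f, pitch_mm):
    """Assumed sensor model: square pixel aperture, 100% fill factor -> |sinc(f * pitch)|."""
    x = math.pi * f * pitch_mm
    return 1.0 if x == 0 else abs(math.sin(x) / x)

def system_mtf(f, sigma_mm, pitch_mm):
    """Cascade rule: component MTFs multiply at each spatial frequency f (cycles/mm)."""
    return lens_mtf_gaussian(f, sigma_mm) * sensor_mtf_pixel(f, pitch_mm)

pitch = 0.002    # hypothetical 2 um pixel pitch, in mm
sigma = 0.0015   # hypothetical 1.5 um lens blur, in mm
for f in (0, 50, 100, 200):  # spatial frequency in line pairs per mm
    print(f, round(system_mtf(f, sigma, pitch), 3))
```

A larger `sigma` (a less well-focused lens) pulls the whole curve down, while the sensor term stays the same.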

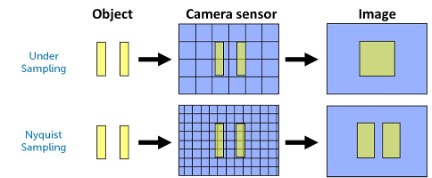

Figure 4: Nyquist Sampling

Both the lens and the sensor contribute to the overall MTF. The diagram above shows how sensor resolution (sampling) affects MTF performance. In this blog, however, we focus on how the focusing ability of the lens contributes to MTF.
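On the sampling side, the Nyquist frequency sets the finest detail a sensor can record: one line pair needs at least two pixels, so f_N = 1 / (2p) for pixel pitch p. A small sketch, with example pitches chosen only for illustration:

```python
def nyquist_lp_per_mm(pixel_pitch_um):
    """Nyquist frequency in line pairs per mm: one line pair needs at least two pixels."""
    pitch_mm = pixel_pitch_um / 1000.0
    return 1.0 / (2.0 * pitch_mm)

for pitch_um in (1.4, 2.0, 3.45):  # example pixel pitches, in micrometres
    print(f"{pitch_um} um pixel -> Nyquist {nyquist_lp_per_mm(pitch_um):.1f} lp/mm")
```

For a 2 µm pitch this gives 250 lp/mm; detail finer than that cannot be resolved by the sensor no matter how well the lens is focused.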

A well-focused system yields a higher MTF, whereas suboptimal focus lowers it. This makes MTF a critical metric for evaluating and optimizing autofocus systems.

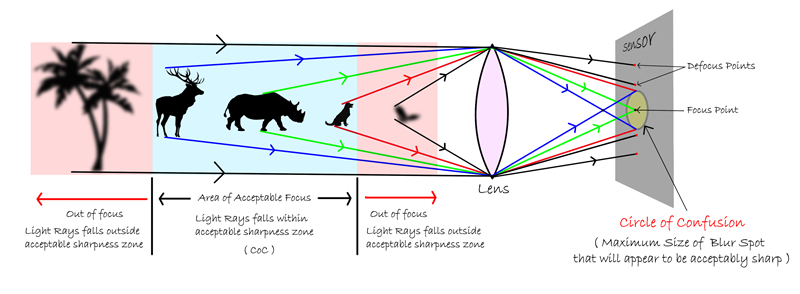

Understanding Circle of Confusion

The Circle of Confusion (CoC) describes the blur spot that a point source forms on the image plane when it is not perfectly focused. If this spot is no larger than the maximum acceptable CoC, the point is perceived as sharp; if it is larger, the point appears blurry.

This principle is vital for understanding the boundaries of acceptable sharpness in an image. It defines the range within which autofocus adjustments keep the image acceptably clear.
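The size of the blur spot can be estimated with the thin-lens model. For a lens of focal length f and f-number N (aperture diameter A = f/N) focused at distance s, a point at distance d forms a blur circle of diameter c = A·f·|d - s| / (d·(s - f)). The sketch below applies this formula; the lens, focus distance, and the 4 µm acceptable CoC are hypothetical values.

```python
def blur_circle_mm(focal_mm, f_number, focus_mm, subject_mm):
    """Geometric blur-spot (circle of confusion) diameter on the sensor, thin-lens model:
    c = A * f * |d - s| / (d * (s - f)), with aperture diameter A = f / N."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * focal_mm * abs(subject_mm - focus_mm)
            / (subject_mm * (focus_mm - focal_mm)))

def looks_sharp(blur_mm, max_coc_mm):
    """A point is perceived as sharp if its blur spot is within the acceptable CoC."""
    return blur_mm <= max_coc_mm

FOCAL_MM, F_NUMBER = 8.0, 2.0  # hypothetical 8 mm lens at f/2
FOCUS_MM = 1000.0              # lens focused at 1 m
MAX_COC_MM = 0.004             # hypothetical acceptable CoC of 4 um

for subject in (1000.0, 1100.0, 1500.0, 3000.0):
    c = blur_circle_mm(FOCAL_MM, F_NUMBER, FOCUS_MM, subject)
    print(f"subject at {subject:.0f} mm: blur {c * 1000:.1f} um, sharp = {looks_sharp(c, MAX_COC_MM)}")
```

Subjects close to the focus distance stay within the acceptable CoC; farther ones exceed it, which is when the autofocus system must move the lens.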

Figure 5: Circle of Confusion

[Source: arindamdhar.com]

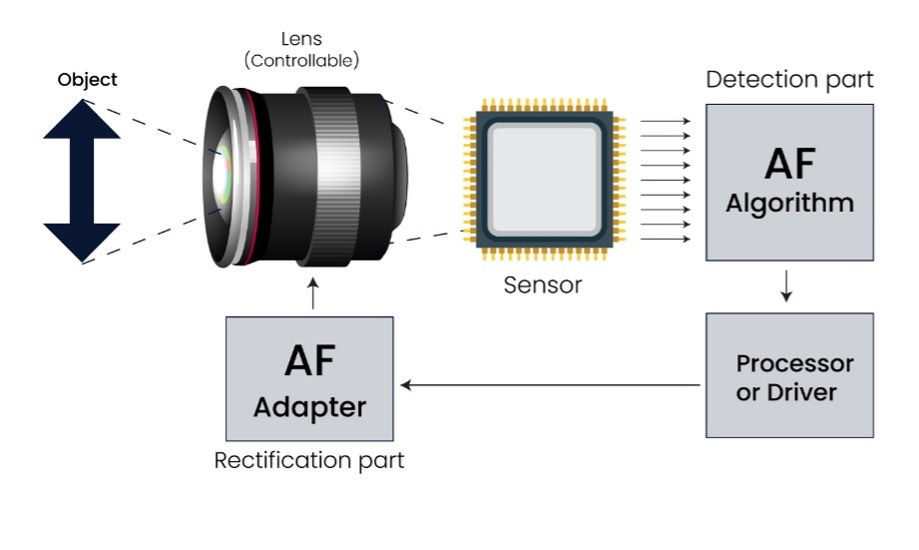

Autofocus Systems

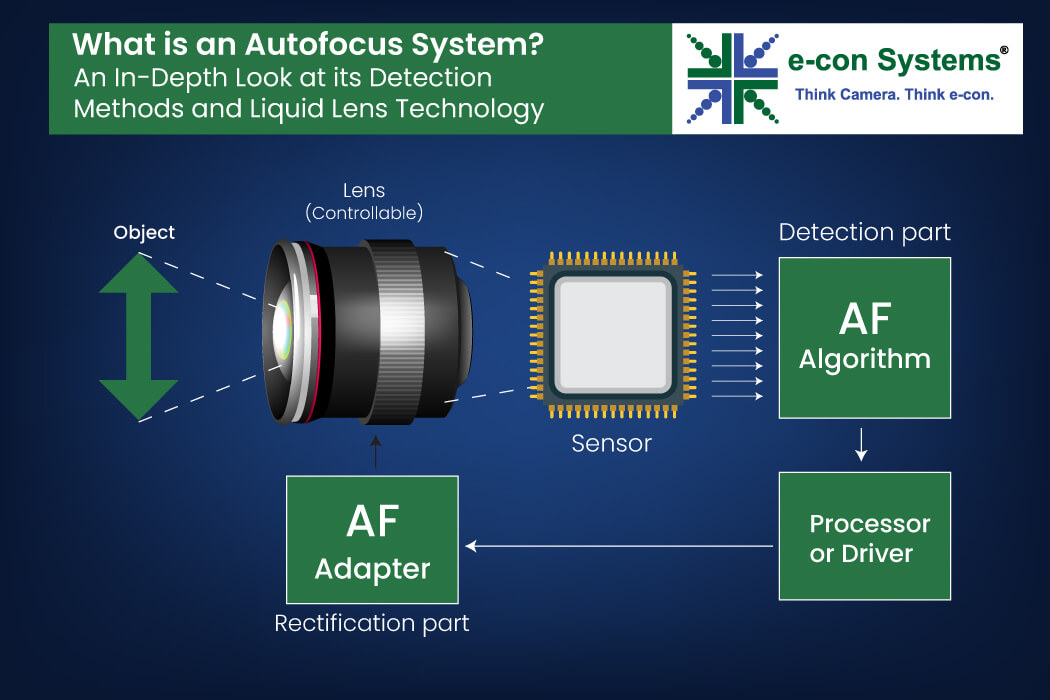

The operation of autofocus systems can be divided into two main phases: Detection and Rectification. Detection determines whether an image is in focus. This is done either by analyzing the image itself (for example, its contrast) or by using external sensors to measure depth.

Rectification, the second phase, adjusts the lens to achieve the desired focus. Once the detection system identifies whether the image is sharp or blurred, rectification systems fine-tune the lens position to optimize focus. This is typically achieved through motorized mechanisms such as voice coil motors (VCM) or stepper motors.

Understanding Detection in Autofocus Systems

Autofocus detection systems fall into two categories:

- Active Auto-Focus Detection Systems

- Passive Auto-Focus Detection Systems

Figure 6: Autofocus System

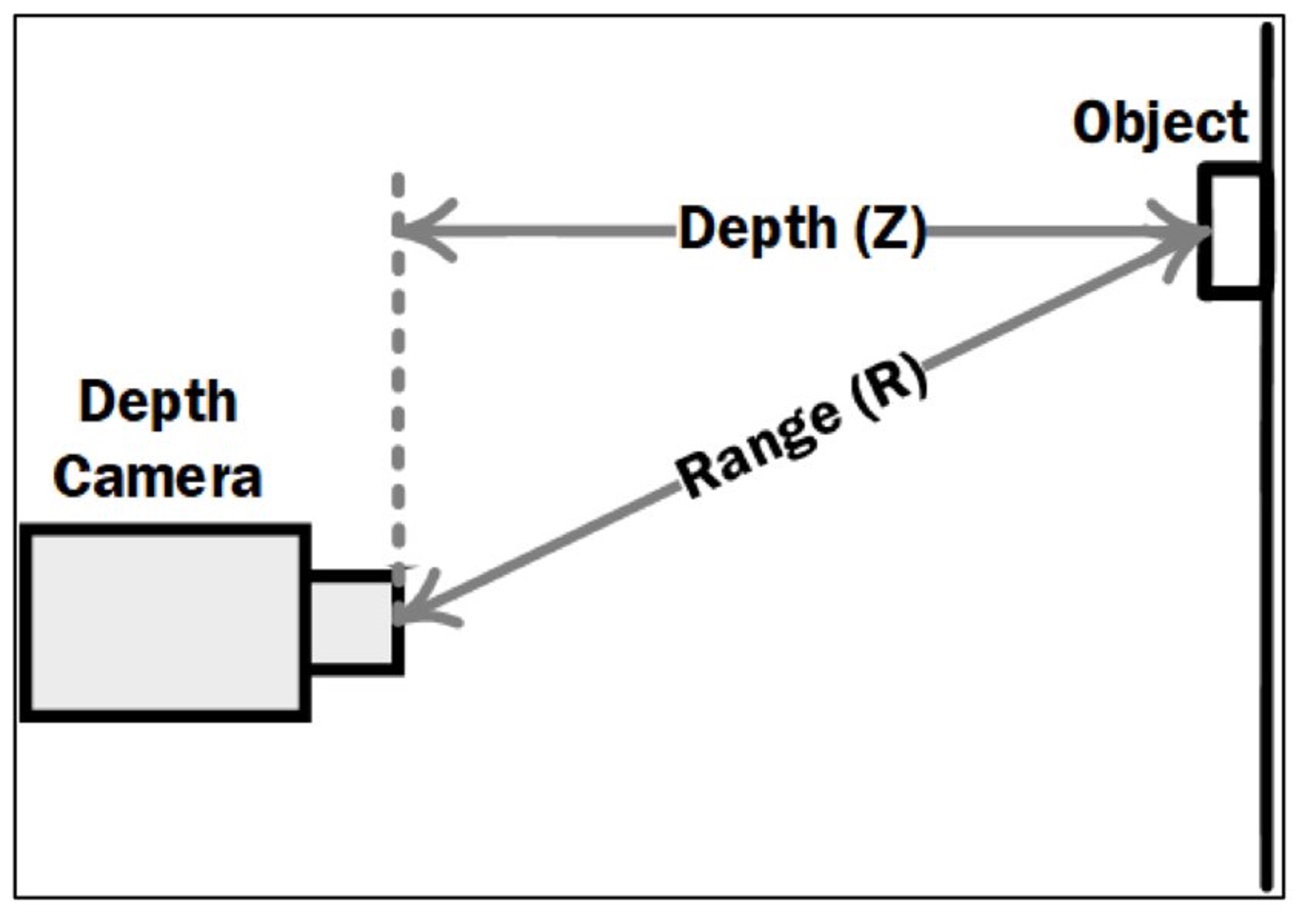

Active Auto-Focus Detection Systems: Active autofocus systems measure the distance (depth) to the subject and use it to adjust the lens. Depth is measured with additional hardware, such as infrared or ultrasonic sensors, which emit a signal and detect its reflection from the subject. Based on the measured depth, the system adjusts the lens position (or its refractive power) to achieve focus. While these systems are effective, the extra hardware increases system complexity and cost.

Figure 7: Depth Perception
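As a rough sketch of how an active system might turn a depth reading into a lens setting, the code below assumes an ultrasonic sensor (distance = speed of sound × echo time / 2) and the thin-lens equation 1/f = 1/u + 1/v, which gives the lens extension beyond its infinity-focus position as f² / (u - f). The echo time and the 8 mm focal length are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # in dry air at about 20 °C

def distance_from_echo_mm(round_trip_s):
    """Ultrasonic ranging: the pulse travels to the subject and back, so halve the path."""
    return SPEED_OF_SOUND_M_PER_S * round_trip_s / 2.0 * 1000.0

def lens_extension_mm(focal_mm, subject_mm):
    """Thin lens 1/f = 1/u + 1/v gives v = f*u / (u - f); the extension beyond the
    infinity-focus position (v = f) is therefore v - f = f^2 / (u - f)."""
    if subject_mm <= focal_mm:
        raise ValueError("subject must be farther than one focal length")
    return focal_mm ** 2 / (subject_mm - focal_mm)

u_mm = distance_from_echo_mm(0.0058)            # hypothetical 5.8 ms echo
ext_um = lens_extension_mm(8.0, u_mm) * 1000.0  # hypothetical 8 mm lens
print(f"subject at {u_mm:.1f} mm -> move lens {ext_um:.1f} um from infinity focus")
```

Because the distance is known up front, the lens can be driven straight to the computed position without a search.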

Passive Auto-Focus Detection Systems: Passive autofocus systems do not measure depth. Instead, they analyze image data and adjust focus based on contrast or phase differences. Let us look at contrast-based and phase-based detection systems in detail.

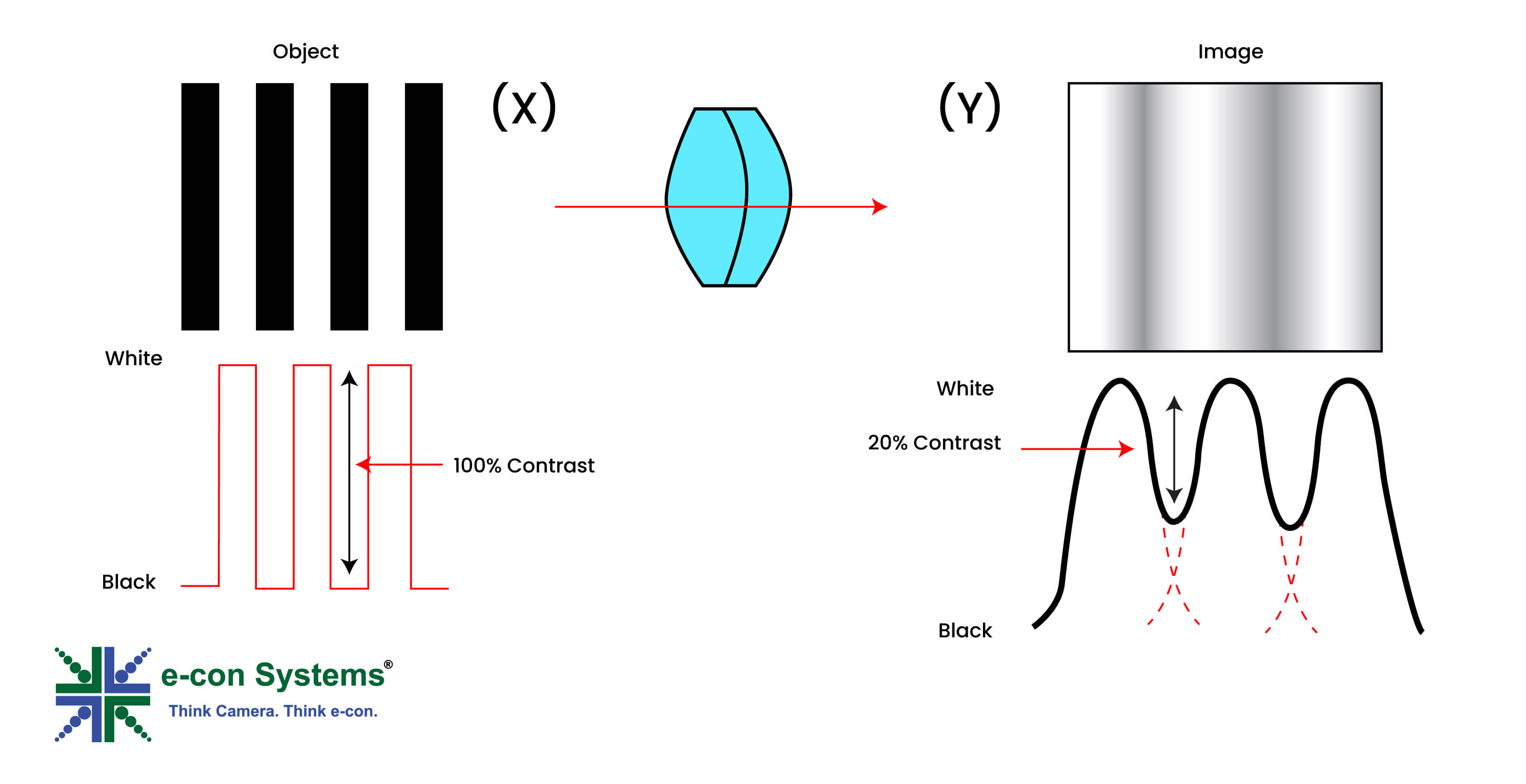

Contrast-Based Passive Auto-Focus Detection System

Contrast-based autofocus measures the contrast within the image at successive lens positions, moving the lens back and forth. It seeks the position of maximum contrast, which corresponds to the sharpest focus. Refer to Figure 2 to see how contrast relates to focus.
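The search can be sketched as follows. This is a minimal illustration under stated assumptions, not any particular camera's algorithm: `capture` is a hypothetical stand-in for grabbing a frame at a given lens position (here it blurs a bar pattern more the further the lens is from a hidden best position), the focus measure is gradient energy (sum of squared neighbour differences), and the search is a simple coarse-to-fine hill climb. Every call to `capture` would be a real frame on a camera, which is why this trial-and-error approach takes time.

```python
import math

def focus_measure(row):
    """Gradient energy: sharper images have stronger edges, so this peaks at best focus."""
    return sum((b - a) ** 2 for a, b in zip(row, row[1:]))

def capture(lens_pos, best_pos=37):
    """Hypothetical camera: one image row of a bar target, blurred more the further
    lens_pos is from best_pos (a stand-in for real defocus)."""
    sigma = 0.3 + 0.5 * abs(lens_pos - best_pos)
    radius = int(3 * sigma) + 1
    kernel = [math.exp(-x * x / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(kernel)
    target = [1.0 if (i // 8) % 2 else 0.0 for i in range(64)]  # 8-pixel-wide bars
    return [sum(k * target[(i + j - radius) % 64] for j, k in enumerate(kernel)) / total
            for i in range(64)]

def contrast_autofocus(lo=0, hi=100, step=16):
    """Coarse-to-fine hill climb: scan with a large step, then refine around the peak."""
    best = max(range(lo, hi + 1, step), key=lambda p: focus_measure(capture(p)))
    while step > 1:
        step //= 2
        candidates = range(max(lo, best - step), min(hi, best + step) + 1, step)
        best = max(candidates, key=lambda p: focus_measure(capture(p)))
    return best

print(contrast_autofocus())
```

No lens calibration is needed: the search only compares focus measures, matching the advantage listed below.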

Advantages:

- High precision focus.

- No need for specific calibration across lenses.

Disadvantages:

- Time-consuming due to its trial-and-error approach.

- Struggles with moving objects.

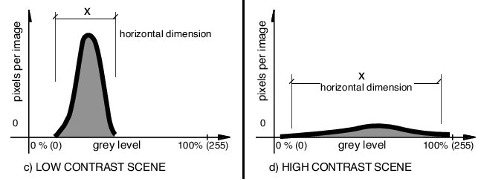

Figure 8: Contrast vs Resolution

[Source: researchgate.net]

Phase Difference Based Passive Auto-Focus Detection System

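Phase detection splits the light coming through opposite sides of the lens into two images and compares their relative position, known as the phase. By measuring the phase difference, the system calculates the direction and amount of lens adjustment needed to achieve focus.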

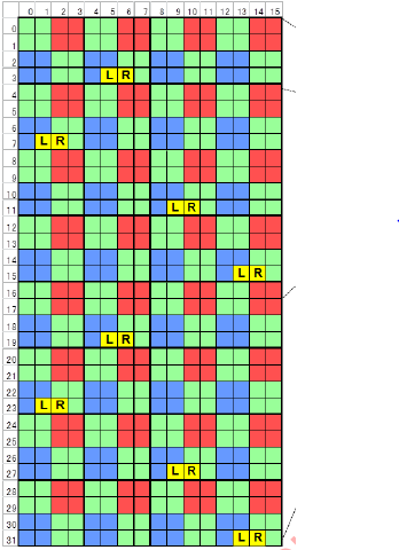

Figure 9: Phase Detector Found Inside the Sensor Marked as L and R

A phase detector can be integrated into the system; in some cameras it is built into the image sensor itself, as shown in Figure 9. The camera compares the signals from the two light paths (marked L and R). If the two are aligned, the subject is in focus. If they are offset, the camera uses the size and direction of the offset to calculate the adjustment required to bring the image into focus.
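A minimal sketch of the comparison step, assuming the L and R pixels each produce a 1-D intensity profile: find the shift that best aligns the two profiles (smallest mean absolute difference). A shift of zero means in focus; the sign gives the direction to move the lens. Converting the shift into a lens movement needs a per-module calibration factor, shown here as a hypothetical constant `STEPS_PER_PIXEL_SHIFT`.

```python
STEPS_PER_PIXEL_SHIFT = 12  # hypothetical calibration: lens motor steps per pixel of L/R shift

def phase_shift(left, right, max_shift=8):
    """Return the integer shift s for which right[i + s] best matches left[i],
    scored by mean absolute difference over the overlapping samples."""
    n = len(left)
    best_s, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        pairs = [(left[i], right[i + s]) for i in range(n) if 0 <= i + s < n]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_s, best_cost = s, cost
    return best_s

def lens_move_steps(shift):
    """Zero shift -> in focus; otherwise the sign sets direction and the size sets distance."""
    return shift * STEPS_PER_PIXEL_SHIFT

# Example: the same bright feature seen by L and R pixels, offset by 3 pixels (defocus).
profile = [0.0] * 10 + [1.0, 3.0, 6.0, 3.0, 1.0] + [0.0] * 17
left = profile
right = [0.0] * 3 + profile[:-3]
s = phase_shift(left, right)
print(s, lens_move_steps(s))
```

A single reading gives both direction and distance, which is why phase detection is faster than the contrast search; noise in the profiles directly perturbs the estimated shift.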

Advantages:

- Faster focus acquisition compared to contrast-based systems.

- Effective for moving subjects.

Disadvantages:

- Can be less accurate than contrast detection, because phase measurements are sensitive to noise.

- Requires dedicated phase-detection sensors or pixels, increasing hardware complexity and cost.

Hybrid Autofocus Detection Systems

Hybrid systems combine contrast-based and phase-based autofocus to leverage their respective strengths. Initially, phase detection provides rapid focus acquisition. Subsequently, contrast detection fine-tunes the focus for precision.
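The two-stage logic can be sketched with two hypothetical stand-ins: `phase_estimate` returns a fast but slightly biased estimate of how far the lens is from focus, and `sharpness` plays the role of a contrast focus measure. The sketch makes one coarse jump using the phase estimate, then hill-climbs one step at a time on contrast until no neighbouring position is sharper.

```python
TRUE_FOCUS = 412  # hypothetical best-focus lens position, unknown to the algorithm

def phase_estimate(pos):
    """Stand-in for phase detection: one reading gives direction and distance,
    but with a small systematic error (+3 steps) to mimic its lower accuracy."""
    return (TRUE_FOCUS - pos) + 3

def sharpness(pos):
    """Stand-in for a contrast focus measure: highest at TRUE_FOCUS."""
    return 1.0 / (1.0 + (pos - TRUE_FOCUS) ** 2)

def hybrid_autofocus(start):
    """Returns (final lens position, number of contrast fine-tuning steps taken)."""
    pos = start + phase_estimate(start)   # stage 1: fast coarse jump from phase detection
    steps = 0
    while True:                           # stage 2: contrast-based fine tuning
        best = max((pos - 1, pos, pos + 1), key=sharpness)
        if best == pos:
            return pos, steps
        pos, steps = best, steps + 1

print(hybrid_autofocus(100))
```

Starting from position 100, the phase jump lands 3 steps past focus and contrast detection needs only a few steps to correct it, instead of sweeping the whole range.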

Understanding The Rectification Part of Autofocus Systems

Once detection determines that an image is out of focus, rectification adjusts the lens to correct it. This is typically done by moving the lens along the optical axis to change its distance from the sensor, using actuators such as stepper motors, voice coil motors (VCMs), or micro motors. Among these, VCMs are the most commonly used, as they balance speed and precision effectively.
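To illustrate the rectification step with a VCM, the sketch below assumes a spring-loaded actuator: the coil force (proportional to current) is balanced by a spring, so lens displacement grows roughly linearly with drive current once a preload current is exceeded. The stroke sensitivity, start current, full-scale current, and 10-bit DAC are all hypothetical values; real modules are characterized per part.

```python
def vcm_dac_code(displacement_um, um_per_ma=2.5, start_ma=10.0, full_scale_ma=100.0, dac_bits=10):
    """Hypothetical spring-loaded VCM: coil force (proportional to current) balances spring
    force (proportional to displacement), so beyond the preload current the displacement is
    roughly linear in current. Returns the DAC code a driver would be sent."""
    if displacement_um < 0:
        raise ValueError("displacement must be non-negative")
    current_ma = start_ma + displacement_um / um_per_ma
    if current_ma > full_scale_ma:
        raise ValueError("requested displacement exceeds the actuator stroke")
    return round(current_ma / full_scale_ma * (2 ** dac_bits - 1))

for d in (0, 25, 65):  # required lens displacement in micrometres
    print(d, vcm_dac_code(d))
```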

Why Are Liquid Lenses Used? What is the Basic Principle of Liquid Lens Technology?

Liquid lens technology offers an alternative to mechanical focus adjustment: instead of moving the lens, it dynamically changes the curvature of a liquid surface inside the lens, and with it the lens's optical power.

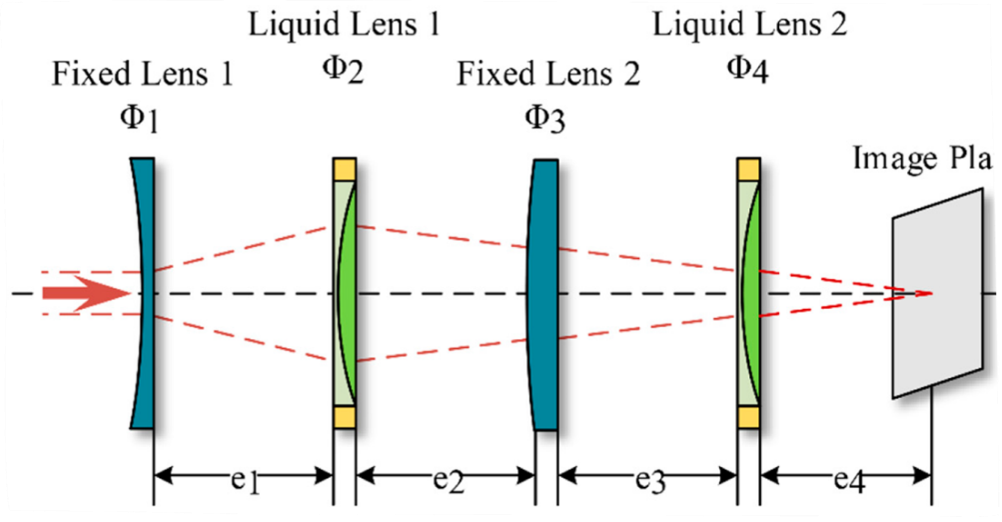

Figure 10: Liquid Lens Technology

[Source: iopscience.iop.org]

How Liquid Lens Technology Works:

- A liquid droplet is placed between two transparent layers.

- When voltage is applied, it changes the shape of the droplet (electrowetting effect), adjusting the lens’ focal length.
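The electrowetting effect is commonly described by the Young-Lippmann equation, cos θ(V) = cos θ₀ + ε₀ε_r V² / (2γd), where θ is the contact angle of the liquid on the insulated electrode, ε_r and d are the insulator's relative permittivity and thickness, and γ is the interfacial tension. The changing contact angle alters the curvature of the liquid interface, and a spherical interface of radius R between media of refractive indices n₁ and n₂ has optical power P = (n₂ - n₁) / R. The sketch below evaluates both; all material values are hypothetical, and the geometry linking θ to R depends on the cell design, so it is not modelled here.

```python
import math

EPS0 = 8.854e-12  # vacuum permittivity, F/m

def contact_angle_deg(volts, theta0_deg=140.0, eps_r=3.0, thickness_m=1e-6, gamma_n_per_m=0.04):
    """Young-Lippmann: cos(theta) = cos(theta0) + eps0*eps_r*V^2 / (2*gamma*d).
    Default material values are hypothetical."""
    c = math.cos(math.radians(theta0_deg))
    c += EPS0 * eps_r * volts ** 2 / (2 * gamma_n_per_m * thickness_m)
    c = max(-1.0, min(1.0, c))  # keep acos in range; real cells saturate before this
    return math.degrees(math.acos(c))

def interface_power_diopters(n1, n2, radius_m):
    """Optical power of one spherical refracting interface: P = (n2 - n1) / R, in 1/m."""
    return (n2 - n1) / radius_m

for v in (0, 20, 40):
    print(f"{v:>2} V -> contact angle {contact_angle_deg(v):.1f} deg")
# Hypothetical water (n = 1.33) / oil (n = 1.50) interface with a 5 mm radius of curvature:
print(f"{interface_power_diopters(1.33, 1.50, 0.005):.1f} dioptres")
```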

Advantages of Liquid Lenses:

- Compact and durable, with no moving mechanical parts.

- Rapid focus adjustment.

- Wide range of focal lengths within a small form factor.

Liquid lenses enable quick and noiseless autofocus, making them a promising innovation for modern imaging systems.

Autofocus systems are integral to achieving sharp and clear images, balancing complex optical principles with advanced technologies. By combining robust detection methods and efficient rectification techniques, today’s autofocus systems continue to evolve, offering ever greater precision and speed.

Explore e-con Systems Auto-Focus Cameras

e-con Systems has 20+ years of experience in designing, developing, and manufacturing OEM cameras. We recognize the high-resolution and autofocus camera requirements of embedded vision applications and build our cameras to best suit industry demands.

Visit our Camera Selector Page to explore our end-to-end portfolio.

We also provide various customization services, including camera enclosures, resolution, frame rate, and sensors of your choice, to ensure our cameras fit perfectly into your embedded vision applications.

If you need help integrating the right cameras into your embedded vision products, please email us at camerasolutions@e-consystems.com.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and has more than 15 years of experience in the embedded vision space. He brings deep knowledge of USB cameras, embedded vision cameras, vision algorithms, and FPGAs. He has built 50+ camera solutions spanning domains such as medical, industrial, agriculture, retail, biometrics, and more. He also has expertise in device driver development and BSP development. Currently, Prabu's focus is on building smart camera solutions that power new-age AI-based applications.