Answering the Top FAQs on Camera Imaging Technologies

1. What is color accuracy?

Color accuracy measures how faithfully a camera reproduces real-world colors, ensuring realistic and consistent images. It's crucial for identifying objects, inspecting products, matching colors, and quality control. It is often measured using a standard color checker in the CIE LAB color space and is represented as Delta E (ΔE). Lower Delta E values indicate better color accuracy.
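As a rough illustration of how a Delta E value is obtained, the sketch below uses the simple CIE76 formula, which is the Euclidean distance between two colors in CIE LAB. The function name and the patch values are hypothetical, and real measurement workflows often use newer formulas such as CIEDE2000.

```python
import math

def delta_e_cie76(lab_reference, lab_measured):
    """Euclidean distance between two (L*, a*, b*) triples (CIE76 formula)."""
    return math.dist(lab_reference, lab_measured)

# Hypothetical color-checker patch: reference value vs. what the camera reported.
reference = (50.0, 20.0, -10.0)
measured = (52.0, 23.0, -4.0)

print(delta_e_cie76(reference, measured))  # 7.0
```

A perfect match gives a Delta E of 0, and the value grows as the reproduced color drifts from the reference.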

To learn more about measurement techniques and influencing factors, please visit the blog What is Color Accuracy? How to Measure Color Accuracy? - e-con Systems

2. What is white balance?

White balance is a fundamental camera setting that establishes the true color of white, serving as a baseline for all other colors. This correction of color balance under varying lighting conditions is a key factor in ensuring accurate and natural-looking images.
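One simple way to picture white balance is the gray-world heuristic, which assumes the average color of a scene should be neutral gray and scales each channel accordingly. This is only a sketch of that one heuristic with made-up pixel values; it is not a description of how any particular camera or ISP performs white balance.

```python
def gray_world_gains(pixels):
    """Return per-channel gains that equalize the average R, G and B values."""
    count = len(pixels)
    averages = [sum(p[c] for p in pixels) / count for c in range(3)]
    gray = sum(averages) / 3
    return [gray / a for a in averages]

def apply_gains(pixels, gains, max_value=255.0):
    """Scale each channel by its gain, clipping to the valid range."""
    return [tuple(min(max_value, p[c] * gains[c]) for c in range(3)) for p in pixels]

# Hypothetical pixels with a warm (reddish) cast.
warm = [(200, 150, 100), (100, 75, 50)]
gains = gray_world_gains(warm)       # [0.75, 1.0, 1.5]
balanced = apply_gains(warm, gains)  # both pixels become neutral gray
print(gains, balanced)
```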

3. What does color saturation mean?

Saturation refers to the degree of color vibrancy or intensity in an image. Highly saturated colors are vibrant, while desaturated colors are muted. Saturation can be adjusted to provide a variety of visual effects, but over-saturation can result in unnatural colors.
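The effect of a saturation control can be sketched with Python's standard `colorsys` module by converting a color to HLS, scaling the saturation component, and converting back. The function name and the sample color are illustrative assumptions.

```python
import colorsys

def scale_saturation(rgb, factor):
    """Scale the saturation of an RGB triple (components in 0..1) by `factor`."""
    hue, lightness, saturation = colorsys.rgb_to_hls(*rgb)
    saturation = max(0.0, min(1.0, saturation * factor))  # keep within 0..1
    return colorsys.hls_to_rgb(hue, lightness, saturation)

orange = (0.8, 0.4, 0.2)
muted = scale_saturation(orange, 0.5)  # less vivid orange
gray = scale_saturation(orange, 0.0)   # fully desaturated: a neutral gray
print(muted, gray)
```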

4. What is the Signal-to-Noise Ratio (SNR)?

The signal-to-noise ratio (SNR) measures the strength of the desired signal compared to background noise and is typically expressed in decibels. Higher SNR values indicate better image quality with more useful information and less noise.
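As a minimal sketch, SNR can be estimated from pixel values sampled over a uniformly lit patch: the mean stands in for the signal and the standard deviation for the noise. The 20·log10 convention used here is a common one for amplitude-like quantities; the function name and sample values are assumptions for illustration.

```python
import math
import statistics

def snr_db(samples):
    """SNR of a flat patch in decibels: 20 * log10(mean / standard deviation).

    Assumes the samples are not all identical (noise must be non-zero).
    """
    signal = statistics.fmean(samples)
    noise = statistics.pstdev(samples)
    return 20 * math.log10(signal / noise)

# Hypothetical pixel values from a uniformly lit gray patch.
patch = [99, 101, 99, 101]
print(snr_db(patch))  # about 40 dB (mean 100, standard deviation 1)
```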

To know more about calculation and practical improvement techniques on SNR, please visit the blog What is Signal-to-Noise Ratio (SNR)? Why is SNR necessary in embedded cameras? - e-con Systems

5. What is gamma correction?

Gamma correction applies a nonlinear adjustment to the luminance of each pixel to ensure accurate image representation in a standard color space such as sRGB, which is common in displays.
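A simplified way to see what gamma correction does is a pure power-law curve. The sketch below assumes a gamma of 2.2 and values normalized to the range 0 to 1; actual display standards use somewhat different curves (for example, a piecewise function), so treat this as an approximation.

```python
def gamma_encode(linear, gamma=2.2):
    """Map a linear light value (0..1) to a gamma-encoded value."""
    return linear ** (1.0 / gamma)

def gamma_decode(encoded, gamma=2.2):
    """Inverse of gamma_encode: recover the linear light value."""
    return encoded ** gamma

mid_gray = gamma_encode(0.5)
print(round(mid_gray, 2))  # 0.73: mid-level linear light is stored as a brighter code value
```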

6. What is dynamic range?

Dynamic range measures the range of light levels in a scene, from the darkest to the brightest areas. The camera's dynamic range is determined by its capacity to record these areas without clipping and with a good signal-to-noise ratio.
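Dynamic range is commonly quoted in decibels as 20·log10 of the ratio between the brightest and darkest recordable levels. The following sketch shows the conversion in both directions; the function names and the example signal levels are hypothetical.

```python
import math

def dynamic_range_db(brightest, darkest):
    """Dynamic range in decibels for a brightest-to-darkest signal ratio."""
    return 20 * math.log10(brightest / darkest)

def db_to_ratio(db):
    """Convert a dynamic range in decibels back to a linear ratio."""
    return 10 ** (db / 20)

# Hypothetical sensor: largest recordable signal 10,000 units, noise floor 10 units.
print(dynamic_range_db(10_000, 10))  # about 60 dB
print(db_to_ratio(120))              # 120 dB corresponds to about 1,000,000:1
```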

7. What are the critical components of HDR (High Dynamic Range)?

Multi-shutter speed capture: HDR captures multiple images of the scene using different shutter speeds, so that bright, medium-lit, and dark areas are each recorded at a suitable exposure. This can also be achieved with split-pixel architecture, where pixels have different sensitivities.

Image combination: HDR technology uses algorithms to combine the different exposures into a composite image that accurately represents both bright and dark areas, preventing overexposure or underexposure.
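The two components above can be sketched as a weighted merge of bracketed exposures. This is an illustrative approach, not the algorithm of any specific camera: each pixel value is divided by its exposure time to estimate scene brightness, and mid-range values are trusted more than values near black or near clipping. All names and sample values are assumptions.

```python
def merge_exposures(frames, exposure_times, max_value=255):
    """Estimate relative scene brightness per pixel from bracketed exposures.

    frames: list of equally sized flat pixel lists, one per exposure.
    exposure_times: list of relative exposure times, one per frame.
    """
    shortest = exposure_times.index(min(exposure_times))
    merged = []
    for samples in zip(*frames):
        weighted_sum = 0.0
        weight_total = 0.0
        for value, time in zip(samples, exposure_times):
            # Hat weight: zero at black and at clipping, largest at mid-range.
            weight = min(value, max_value - value)
            weighted_sum += weight * value / time
            weight_total += weight
        if weight_total > 0:
            merged.append(weighted_sum / weight_total)
        else:
            # Every sample is black or clipped: fall back to the shortest exposure.
            merged.append(samples[shortest] / exposure_times[shortest])
    return merged

short_exposure = [10, 100, 250]  # relative exposure time 1
long_exposure = [40, 255, 255]   # relative exposure time 4 (bright pixels clip)
print(merge_exposures([short_exposure, long_exposure], [1, 4]))  # [10.0, 100.0, 250.0]
```

In this example the clipped values from the long exposure receive zero weight, so the bright pixels are recovered from the short exposure alone.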

To understand the effective ways to achieve high dynamic range imaging, please visit the blog What are the most effective methods to achieve High Dynamic Range imaging? - e-con Systems

8. Is it possible to modify the i-HDR settings?

You can change the i-HDR settings through the Extension Unit control provided through the e-CAMView application for Windows OS and the QtCAM application for Linux OS.

9. What is LED Flicker Mitigation (LFM)?

LED Flicker Mitigation (LFM) reduces or eliminates flickering effects associated with LED lighting systems, such as traffic signals and signboards, due to repetitive ON/OFF cycles. This technique results in higher-quality images when capturing LED-based lights and signs.

10. What is spectral sensitivity?

Spectral sensitivity measures the response of an image sensor to different light wavelengths, which influences color reproduction and light capture. Cameras with well-calibrated spectral sensitivity reproduce colors more accurately.

11. What is an ISP (Image Signal Processor)?

An image signal processor (ISP) in a camera processes raw images from a camera sensor. It handles tasks such as demosaicing, denoising, and auto adjustments to enhance the final image.

12. What is multispectral imaging?

Multispectral imaging (MSI) captures images across specific wavelength ranges of the electromagnetic spectrum, which can span the visible, ultraviolet (UV), near-infrared (NIR), short-wave infrared (SWIR), mid-wave infrared (MWIR), and long-wave infrared (LWIR) regions. Cameras use filters or detectors to isolate the wavelength ranges, integrating multiple photodetectors per pixel. Consequently, every pixel carries a spectral signature across the wavelength bands, forming a multidimensional dataset.

13. What is image binning?

Image binning increases the effective size of sensor pixels by combining the charge of adjacent pixels, improving image quality in low-light situations by enhancing sensitivity.

To know how to enhance imaging quality through image binning, please visit the blog.

14. What method is used for image binning?

The common method is 2×2 binning, which combines data from four neighboring pixels into a single "superpixel." This enhances performance in low-light environments but reduces the effective resolution to one-fourth of the sensor's native resolution.
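Combining four neighboring pixels into one superpixel can be sketched on a small monochrome image as follows. This host-side example sums each 2×2 block; on real sensors binning is typically done on-chip, and color sensors combine same-color pixels, which this simplified sketch does not model.

```python
def bin_2x2(image):
    """Sum each 2x2 block of a 2D list into one superpixel.

    Assumes the image has an even number of rows and columns.
    """
    height, width = len(image), len(image[0])
    return [
        [
            image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]
            for c in range(0, width - 1, 2)
        ]
        for r in range(0, height - 1, 2)
    ]

image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_2x2(image))  # [[14, 22], [46, 54]]: 16 pixels become 4 superpixels
```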

15. What is the role of demosaicing in color cameras?

Demosaicing produces full-color pixels in color cameras using a Bayer pattern by combining photosite data. It ensures accurate color representation by inferring missing color information.
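To make the idea of inferring missing color information concrete, here is a deliberately crude nearest-neighbor demosaic. It assumes an RGGB layout (red at top-left, blue at bottom-right of each 2×2 cell) and gives every pixel in a cell the same color; production ISPs use far more sophisticated interpolation.

```python
def demosaic_nearest(raw):
    """Turn an RGGB Bayer image (2D list, even dimensions) into RGB triples.

    Each pixel borrows its missing colors from the other photosites in its 2x2 cell.
    """
    height, width = len(raw), len(raw[0])
    rgb = [[None] * width for _ in range(height)]
    for r in range(0, height, 2):
        for c in range(0, width, 2):
            red = raw[r][c]
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2  # average the two greens
            blue = raw[r + 1][c + 1]
            for dr in (0, 1):
                for dc in (0, 1):
                    rgb[r + dr][c + dc] = (red, green, blue)
    return rgb

# One hypothetical 2x2 Bayer cell: R=200, G=100 and 120, B=50.
cell = [[200, 100],
        [120, 50]]
print(demosaic_nearest(cell)[0][0])  # (200, 110.0, 50)
```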

16. What is multi-ROI?

ROI, or Region of Interest, enables users to focus on and capture the highest quality image within a selected frame area.

With multi-ROI, users can simultaneously focus on and optimize image quality for multiple predefined areas (ROIs) within a single frame. By processing only the selected regions, multi-ROI can improve processing speed, reduce data load, and enhance overall system efficiency.
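The data reduction from multi-ROI can be estimated with simple arithmetic. The sketch below crops several rectangles from a frame on the host side and reports what fraction of the full frame they represent. The ROI coordinates and frame size are hypothetical, and on an actual camera the ROI readout would be configured on the sensor rather than cropped in software.

```python
def crop_rois(frame, rois):
    """Return one cropped 2D list per ROI; each ROI is (x, y, width, height)."""
    return [[row[x:x + w] for row in frame[y:y + h]] for (x, y, w, h) in rois]

def roi_data_fraction(frame_width, frame_height, rois):
    """Fraction of full-frame pixels covered by the ROIs (assumes no overlap)."""
    roi_pixels = sum(w * h for (_, _, w, h) in rois)
    return roi_pixels / (frame_width * frame_height)

# Two hypothetical ROIs inside a 1920x1080 frame.
rois = [(100, 50, 200, 100), (800, 400, 320, 240)]
fraction = roi_data_fraction(1920, 1080, rois)
print(f"{fraction:.1%} of the full-frame data")  # roughly 4.7%
```

Because only a small fraction of the pixels needs to be read out and processed, the remaining bandwidth can go toward a higher frame rate.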

To learn how to achieve a high frame rate, please visit the blog How to achieve a high frame rate of up to 1164 fps using e-CAM56_CUOAGX's Multi-ROI Feature.

17. Can an ROI be drawn around a specific region and get faster frame rates?

Yes, you can enable ROI functionality through customization to achieve faster frame rates.

18. What is an inertial measurement unit (IMU)?

An IMU detects movements and rotations across six degrees of freedom, including pitch, yaw, and roll, providing comprehensive data on an object's spatial orientation and movement.

19. How do integrated IMUs help 3D cameras perform better in different lighting conditions?

IMUs track relative orientation and position, ensuring accurate spatial representation even in challenging lighting conditions. They also aid in camera stabilization by compensating for unwanted motion and reducing latency for quicker visual adjustments.

20. What is a pixel?

Pixels, or photosites, are tiny, light-sensitive components on a camera sensor that capture light to form images. Pixel size, which is measured in microns (µm), affects the sensor's ability to capture light.

21. How vital is the role of a pixel in cameras?

Cameras with larger pixel sensors capture more light, improving image quality in low-light conditions. Smaller pixel sensors capture more details, making them suitable for high-detail capture applications.

22. What is split-pixel HDR technology?

Split-pixel HDR, often referred to as sub-pixel HDR, involves splitting each pixel on the sensor into two sub-pixels, allowing the camera to capture both bright and dark areas of a scene simultaneously. This technology is crucial in embedded vision systems as it enables the recording of a broader range of brightness levels while simultaneously decreasing motion blur and producing clearer, realistic images with more accurate color representation.

To explore HDR modes and split-pixel technology, please visit the blog split-pixel HDR technology - e-con Systems.

23. How does the split-pixel HDR technique work?

A single pixel in an image capture system consists of a large and a small sub-pixel, each with its own on-chip microlens (OCL). The SP2 OCL is located in the gap section of SP1. The high-sensitivity sub-pixel renders dark subjects brightly, while the low-sensitivity sub-pixel captures bright subjects without saturating. This combination creates a wide dynamic range, ensuring that no detail is lost to blackout or blowout in high-contrast scenes. This split or sub-pixel architecture helps the camera capture a high dynamic range of 120 dB.

Top view of the pixel architecture

24. What is the Bayer pattern?

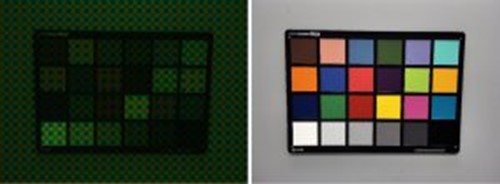

Camera sensors produce color images using the Bayer pattern in 8, 10, or 12 bits. In these raw images, red, green, and blue color information is distributed across the pixels, with each pixel recording only one of the three colors. The Image Signal Processor (ISP) manages and refines the data to convert this raw sensor output into a fully processed image. The comparison image below illustrates the difference between a Bayer image and a processed image.

Bayer vs ISP processed image

25. What is the difference between SYNC and ASYNC modes?

SYNC Mode: Images are captured at fixed intervals based on a common clock signal or external trigger. All operations occur at regular, predictable times. Multiple cameras can be synchronized to capture images simultaneously, which is helpful for applications like 3D imaging or capturing high-speed events from different angles.

ASYNC Mode: Images are captured independently without relying on a fixed clock signal. Each capture event can occur at any time, often triggered by specific events or conditions. Cameras operate independently, which can be helpful in systems where different cameras must capture images at different times.

| Feature | Synchronous (SYNC) Mode | Asynchronous (ASYNC) Mode |
|---|---|---|
| Timing | Fixed, regular intervals | Flexible, event-driven |
| Coordination | High coordination; cameras can be synchronized | Low coordination; cameras operate independently |
| Determinism | High, precise, and predictable | Variable; depends on events and system load |
| Latency | Low, minimal delay between trigger and capture | Variable, potentially higher latency |
| Use Cases | 3D imaging, high-speed events, scientific studies | Security, surveillance, industrial automation |

Mr. Thomas Yoon +82-10-5380-0313